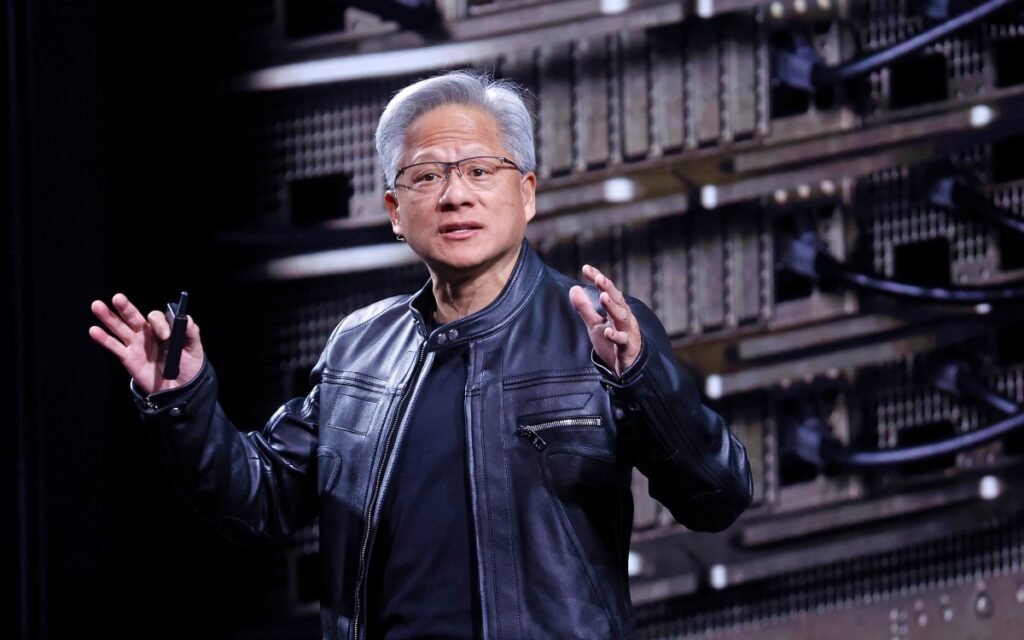

Today at the Consumer Electronics Show, Nvidia CEO Jensen Huang officially launched the company’s new Rubin computing architecture, which he described as the state of the art in AI hardware. The new architecture is currently in production and is expected to ramp up further in the second half of the year.

“Vera Rubin is designed to address this fundamental challenge that we have: The amount of computation necessary for AI is skyrocketing,” Huang told the audience. “Today, I can tell you that Vera Rubin is in full production.”

The Rubin architecture, which was first announced in 2024, is the latest result of Nvidia’s relentless hardware development cycle, which has transformed Nvidia into the most valuable corporation in the world. The Rubin architecture will replace the Blackwell architecture, which, in turn, replaced the Hopper and Lovelace architectures.

Rubin chips are already slated for use by nearly every major cloud provider, including high-profile Nvidia partnerships with Anthropic, OpenAI, and Amazon Web Services. Rubin systems will also be used in HPE’s Blue Lion supercomputer and the upcoming Doudna supercomputer at Lawrence Berkeley National Lab.

Named for the astronomer Vera Florence Cooper Rubin, the Rubin architecture consists of six separate chips designed to be used in concert. The Rubin GPU stands at the center, but the architecture also addresses growing bottlenecks in storage and interconnection with new improvements in the BlueField and NVLink systems, respectively. The architecture also includes a new Vera CPU, designed for agentic reasoning.

Explaining the benefits of the new storage, Nvidia’s senior director of AI infrastructure solutions, Dion Harris, pointed to the growing cache-related memory demands of modern AI systems.

“As you start to enable new types of workflows, like agentic AI or long-term tasks, that puts a lot of stress and requirements on your KV cache,” Harris told reporters on a call, referring to a memory system used by AI models to condense inputs. “So we’ve introduced a new tier of storage that connects externally to the compute device, which allows you to scale your storage pool much more efficiently.”

As expected, the new architecture also represents a significant advance in speed and power efficiency. According to Nvidia’s tests, the Rubin architecture will operate three and a half times faster than the previous Blackwell architecture on model-training tasks and five times faster on inference tasks, reaching as high as 50 petaflops. The new platform will also support eight times more inference compute per watt.

Rubin’s new capabilities come amid intense competition to build AI infrastructure, which has seen both AI labs and cloud providers scramble for Nvidia chips as well as the facilities necessary to power them. On an earnings call in October 2025, Huang estimated that between $3 trillion and $4 trillion will be spent on AI infrastructure over the next five years.
