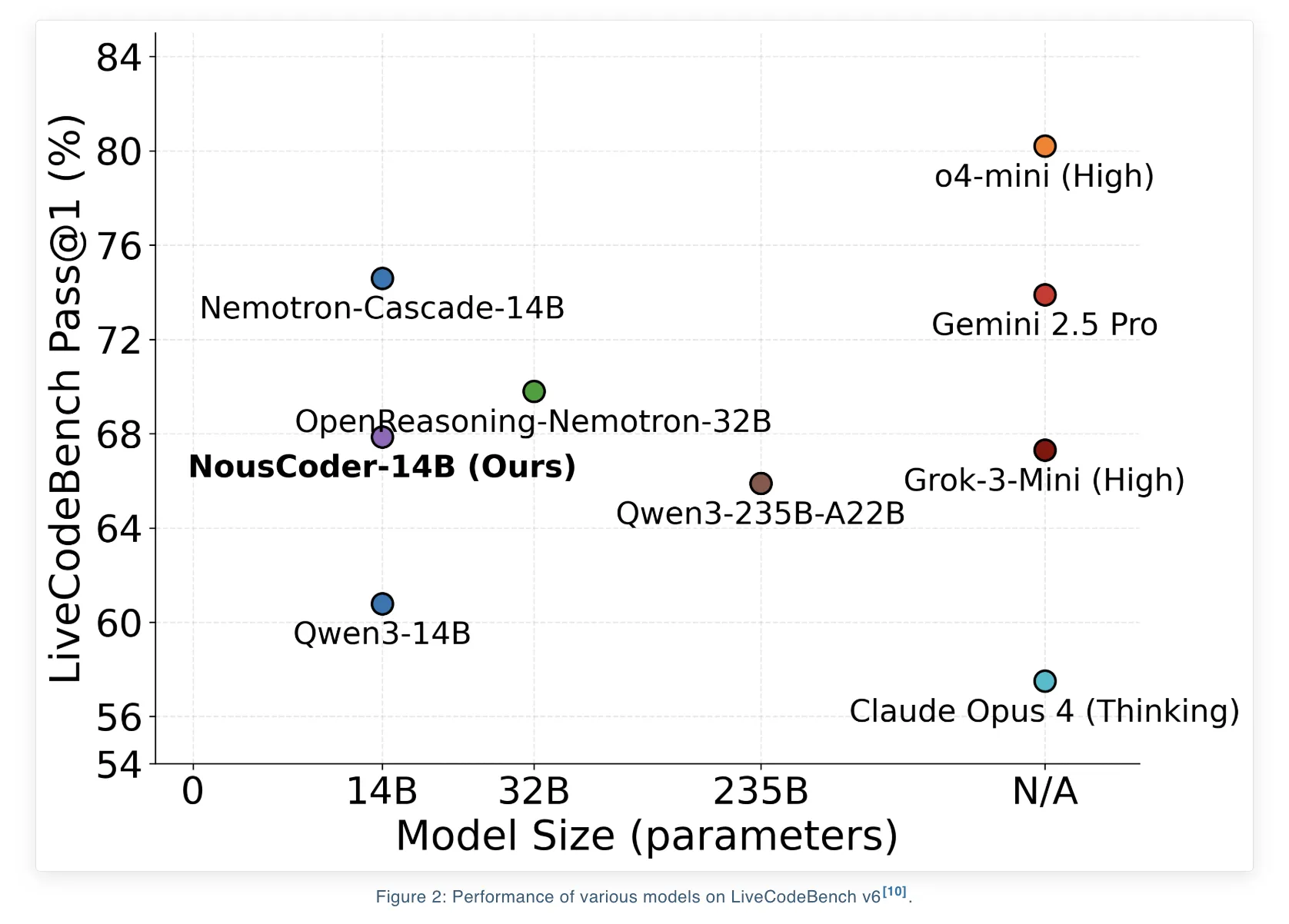

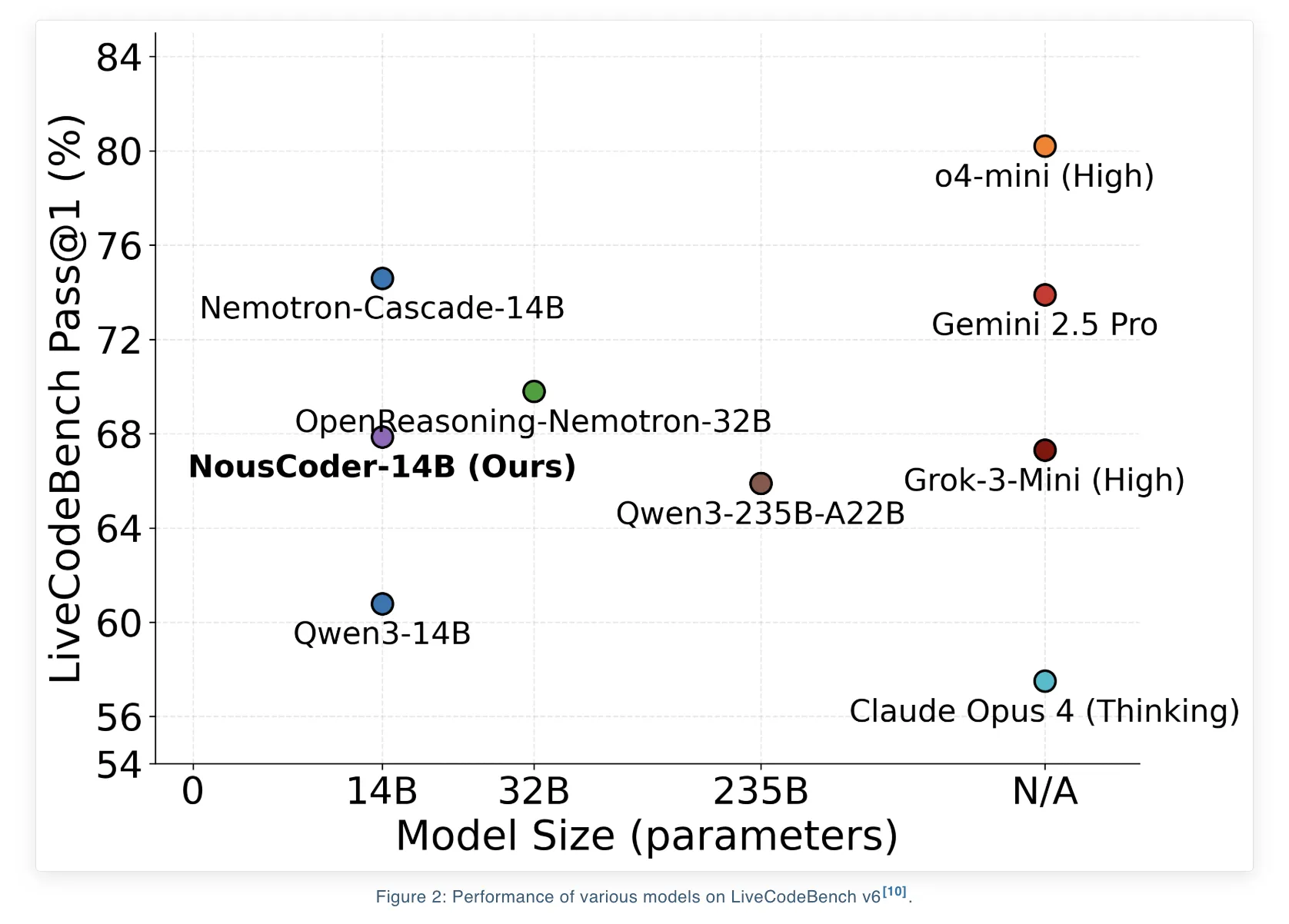

Nous Analysis has launched NousCoder-14B, a aggressive olympiad programming mannequin that’s put up skilled on Qwen3-14B utilizing reinforcement studying (RL) with verifiable rewards. On the LiveCodeBench v6 benchmark, which covers issues from 08/01/2024 to 05/01/2025, the mannequin reaches a Move@1 accuracy of 67.87 %. That is 7.08 proportion factors greater than the Qwen3-14B baseline of 60.79 % on the identical benchmark. The analysis staff skilled the mannequin on 24k verifiable coding issues utilizing 48 B200 GPUs over 4 days, and launched the weights beneath the Apache 2.0 license on Hugging Face.

Benchmark focus and what Move@1 means

LiveCodeBench v6 is designed for aggressive programming analysis. The take a look at break up used right here comprises 454 issues. The coaching set makes use of the identical recipe because the DeepCoder-14B mission from Agentica and Collectively AI. It combines issues from TACO Verified, PrimeIntellect SYNTHETIC 1, and LiveCodeBench issues created earlier than 07/31/2024.

The benchmark solely contains aggressive programming type duties. For every downside, an answer should respect strict time and reminiscence limits and should move a big set of hidden enter output checks. Move@1 is the fraction of issues the place the primary generated program passes all checks, together with time and reminiscence constraints.

Dataset development for execution primarily based RL

All datasets used for coaching are composed of verifiable code technology issues. Every downside has a reference implementation and lots of take a look at circumstances. The coaching set comprises 24k issues drawn from:

- TACO Verified

- PrimeIntellect SYNTHETIC 1

- LiveCodeBench issues that come earlier than 07/31/2024

The take a look at set is LiveCodeBench v6, which has 454 issues between 08/01/2024 and 05/01/2025.

Each downside is a whole aggressive programming activity with an outline, enter format, output format, and take a look at circumstances. This setup is essential for RL as a result of it offers a binary reward sign that’s low-cost to compute as soon as the code has run.

RL setting with Atropos and Modal

The RL setting is constructed utilizing the Atropos framework. NousCoder-14B is prompted utilizing the usual LiveCodeBench immediate format, and it generates Python code for every downside. Every rollout receives a scalar reward that relies on take a look at case outcomes:

- Reward 1 when the generated code passes all take a look at circumstances for that downside

- Reward −1 when the code outputs a flawed reply, exceeds a 15 second time restrict, or exceeds a 4 GB reminiscence restrict on any take a look at case

To execute untrusted code safely and at scale, the staff makes use of Modal as an autoscaled sandbox. The system launches one Modal container per rollout in the principle design that the analysis staff describes because the used setting. Every container runs all take a look at circumstances for that rollout. This avoids mixing coaching compute with verification compute and retains the RL loop steady.

The analysis staff additionally pipelines inference and verification. When an inference employee finishes a technology, it sends the completion to a Modal verifier and instantly begins a brand new technology. With many inference employees and a set pool of Modal containers, this design retains the coaching loop inference compute sure as an alternative of verification sure.

The staff discusses 3 verification parallelization methods. They discover one container per downside, one per rollout, and one per take a look at case. They lastly keep away from the per take a look at case setting due to container launch overhead and use an method the place every container evaluates many take a look at circumstances and focuses on a small set of the toughest take a look at circumstances first. If any of those fail, the system can cease verification early.

GRPO goals, DAPO, GSPO, and GSPO+

NousCoder-14B makes use of Group Relative Coverage Optimization (GRPO) which doesn’t require a separate worth mannequin. On prime of GRPO the analysis staff take a look at 3 goals: Dynamic sAmpling Coverage Optimization (DAPO), Group Sequence Coverage Optimization (GSPO), and a modified GSPO variant referred to as GSPO+.

All 3 goals share the identical definition of benefit. The benefit for every rollout is the reward for that rollout normalized by the imply and commonplace deviation of rewards contained in the group. DAPO applies significance weighting and clipping on the token degree, and introduces three primary adjustments relative to GRPO:

- A clip greater rule that will increase exploration for low likelihood tokens

- A token degree coverage gradient loss that offers every token equal weight

- Dynamic sampling, the place teams which can be all right or all incorrect are dropped as a result of they carry zero benefit

GSPO strikes the significance weighting to the sequence degree. It defines a sequence significance ratio that aggregates token ratios over the entire program. GSPO+ retains sequence degree correction, but it surely rescales gradients in order that tokens are weighted equally no matter sequence size.

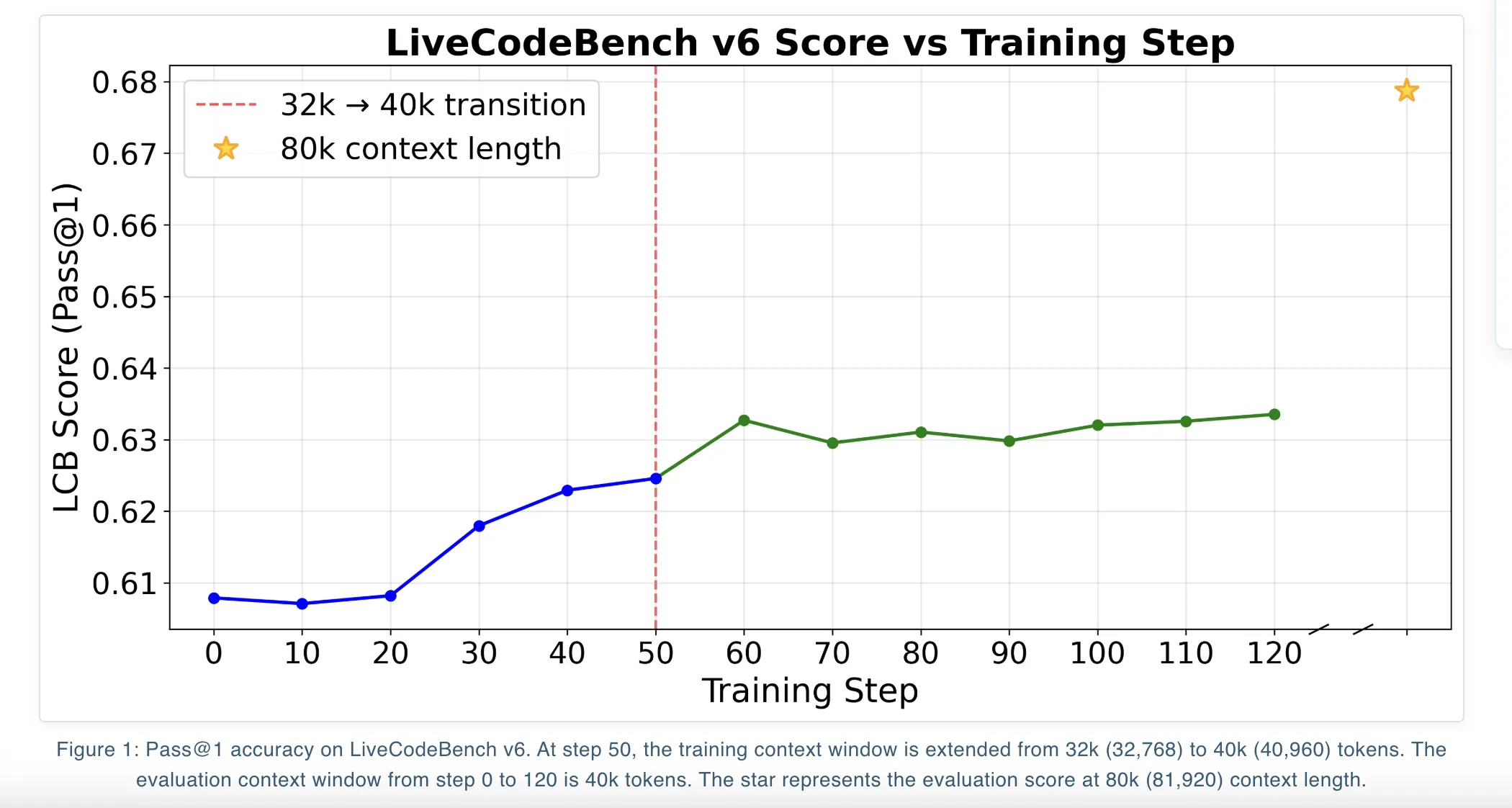

On LiveCodeBench v6, the variations between these goals are modest. At a context size of 81,920 tokens, DAPO reaches a Move@1 of 67.87 % whereas GSPO and GSPO+ attain 66.26 % and 66.52 %. At 40,960 tokens, all 3 goals cluster round 63 % Move@1.

Iterative context extension and overlong filtering

Qwen3-14B helps lengthy context and the coaching follows an iterative context extension schedule. The staff first trains the mannequin with a 32k context window after which continues coaching on the most Qwen3-14B context window of 40k. At every stage they choose the checkpoint with the very best LiveCodeBench rating at 40k context after which use YaRN context extension at analysis time to succeed in 80k tokens, that’s 81,920 tokens.

A key trick is overlong filtering. When a generated program exceeds the utmost context window, they reset its benefit to zero. This removes that rollout from the gradient sign somewhat than penalizing it. The analysis staff report that this method avoids pushing the mannequin towards shorter options for purely optimization causes and helps keep high quality once they scale context size at take a look at time.

Key Takeaways

- NousCoder 14B is a Qwen3-14B primarily based aggressive programming mannequin skilled with execution primarily based RL, it reaches 67.87 % Move@1 on LiveCodeBench v6, a 7.08 proportion level acquire over the Qwen3-14B baseline of 60.79 % on the identical benchmark.

- The mannequin is skilled on 24k verifiable coding issues from TACO Verified, PrimeIntellect SYNTHETIC-1, and pre 07 31 2024 LiveCodeBench duties, and evaluated on a disjoint LiveCodeBench v6 take a look at set of 454 issues from 08/01/2024 to 05/01/2025.

- The RL setup makes use of Atropos, with Python options executed in sandboxed containers, a easy reward of 1 for fixing all take a look at circumstances and minus 1 for any failure or useful resource restrict breach, and a pipelined design the place inference and verification run asynchronously.

- Group Relative Coverage Optimization goals DAPO, GSPO, and GSPO+ are used for lengthy context code RL, all function on group normalized rewards, and present comparable efficiency, with DAPO reaching the very best Move@1 on the longest 81,920 token context.

- The coaching makes use of iterative context extension, first at 32k then at 40k tokens, together with YaRN primarily based extension at analysis time to 81,920 tokens, contains overlong rollout filtering for stability, and ships as a completely reproducible open stack with Apache 2.0 weights and RL pipeline code.

Take a look at the Model Weights and Technical details. Additionally, be happy to comply with us on Twitter and don’t overlook to hitch our 100k+ ML SubReddit and Subscribe to our Newsletter. Wait! are you on telegram? now you can join us on telegram as well.

Asif Razzaq is the CEO of Marktechpost Media Inc.. As a visionary entrepreneur and engineer, Asif is dedicated to harnessing the potential of Synthetic Intelligence for social good. His most up-to-date endeavor is the launch of an Synthetic Intelligence Media Platform, Marktechpost, which stands out for its in-depth protection of machine studying and deep studying information that’s each technically sound and simply comprehensible by a large viewers. The platform boasts of over 2 million month-to-month views, illustrating its recognition amongst audiences.