Microsoft says it deployed the first batch of its homegrown AI chips in one of its data centers this week, with plans to roll out more in the coming months.

The chip, named the Maia 200, is designed to be what Microsoft calls an "AI inference powerhouse," meaning it's optimized for the compute-intensive work of running AI models in production. The company released some impressive processing-speed specs for Maia, saying it outperforms Amazon's latest Trainium chips and Google's latest Tensor Processing Units (TPUs).

All of the cloud giants are turning to their own AI chip designs, in part because of the difficulty and expense of obtaining the latest and greatest from Nvidia, a supply crunch that shows no signs of abating.

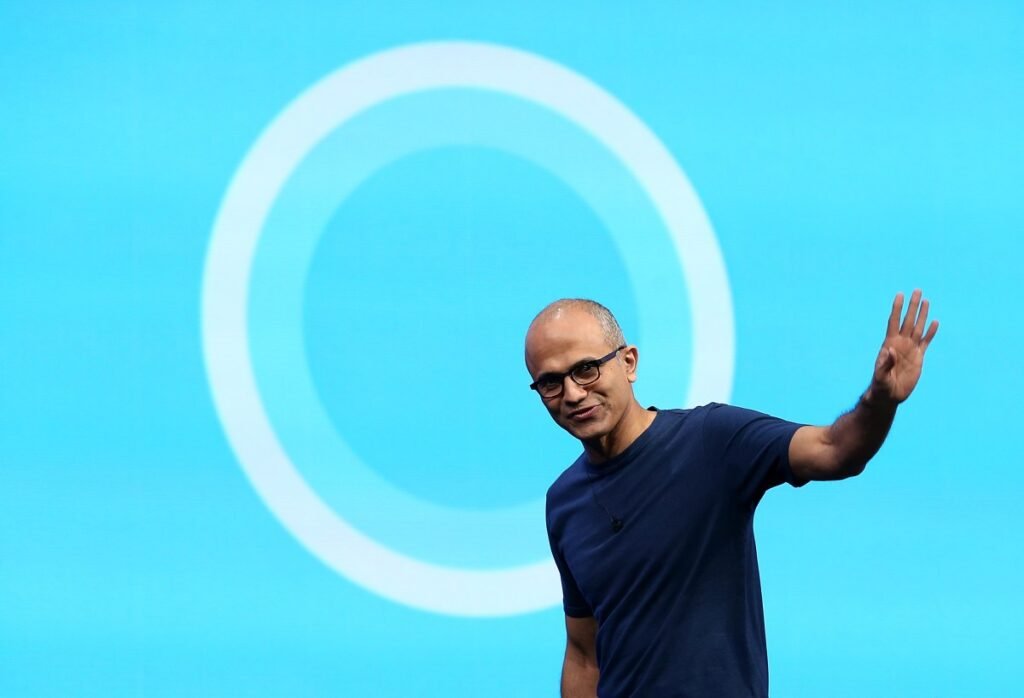

But even with its own state-of-the-art, high-performance chip in hand, Microsoft CEO Satya Nadella said the company will still be buying chips made by others.

"We have a great partnership with Nvidia, with AMD. They are innovating. We are innovating," he explained. "I think a lot of folks just talk about who's ahead. Just remember, you have to be ahead for all time to come."

He added: "Because we can vertically integrate doesn't mean we just only vertically integrate." Vertical integration here means building its own systems from top to bottom, without using wares from other vendors.

That said, Maia 200 will be used by Microsoft's own so-called Superintelligence team, the AI specialists building the software giant's own frontier models. That's according to Mustafa Suleyman, the former Google DeepMind co-founder who now leads the team. Microsoft is working on its own models to perhaps one day lessen its reliance on OpenAI, Anthropic, and other model makers.


The Maia 200 chip will also support OpenAI's models running on Microsoft's Azure cloud platform, the company says. But by all accounts, securing access to the most advanced AI hardware is still a challenge for everyone, paying customers and internal teams alike.

So in a post on X, Suleyman clearly relished sharing the news that his team gets first dibs. "It's a big day," he wrote when the chip launched. "Our Superintelligence team will be the first to use Maia 200 as we develop our frontier AI models."