Microsoft AI has officially launched MAI-Voice-1 and MAI-1-preview, marking a new phase in the company's artificial intelligence research and development efforts. The announcement shows Microsoft AI building models in-house, without third-party involvement. The two models play distinct but complementary roles: MAI-Voice-1 in speech synthesis and MAI-1-preview in general-purpose language understanding.

MAI-Voice-1: Technical Details and Capabilities

MAI-Voice-1 is a speech generation model that produces high-fidelity audio. It generates one minute of natural-sounding audio in under one second on a single GPU, supporting applications such as interactive assistants and podcast narration with low latency and modest hardware requirements.
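A common way to express speech-synthesis speed is the real-time factor (RTF): wall-clock generation time divided by the duration of the audio produced, where values below 1 mean faster than real time. The minimal sketch below only does that arithmetic on the figures quoted above (60 s of audio in under 1 s), treating 1 s as an upper bound on generation time; the function name is illustrative and not part of any Microsoft tooling.

```python
def real_time_factor(generation_seconds: float, audio_seconds: float) -> float:
    """Return wall-clock generation time divided by generated audio duration.

    RTF < 1 means audio is produced faster than it plays back.
    """
    if audio_seconds <= 0:
        raise ValueError("audio_seconds must be positive")
    return generation_seconds / audio_seconds


# Figures quoted for MAI-Voice-1: one minute of audio in under one second
# on a single GPU. One second is used here as an upper bound on generation time.
audio_s = 60.0
gen_s_upper = 1.0

rtf_upper = real_time_factor(gen_s_upper, audio_s)  # upper bound on RTF
speedup_lower = audio_s / gen_s_upper               # lower bound on speed-up

print(f"RTF <= {rtf_upper:.4f}")                    # RTF <= 0.0167
print(f"speed-up >= {speedup_lower:.0f}x real time")  # speed-up >= 60x real time
```

Because the generation time is an upper bound, the result is an upper bound on RTF and a lower bound on the speed-up; actual numbers depend on the GPU model and workload, which this article does not specify.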

The model uses a transformer-based architecture trained on a diverse multilingual speech dataset. It handles single-speaker and multi-speaker scenarios, providing expressive and context-appropriate voice outputs.

MAI-Voice-1 is integrated into Microsoft products like Copilot Daily for voice updates and news summaries. It is available for testing in Copilot Labs, where users can create audio stories or guided narratives from text prompts.

Technically, the model prioritizes quality, versatility, and speed. Because it runs on a single GPU rather than requiring several, it can be integrated into consumer devices and cloud applications beyond research settings.

MAI-1-preview: Foundation Model Architecture and Performance

MAI-1-preview is Microsoft’s first end-to-end, in-house foundation language model. Unlike previous models that Microsoft integrated or licensed from outside, MAI-1-preview was trained entirely on Microsoft’s own infrastructure, using a mixture-of-experts architecture and approximately 15,000 NVIDIA H100 GPUs.
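In a mixture-of-experts (MoE) model, some dense layers are replaced by several parallel "expert" sub-networks plus a learned router that sends each token to only a few of them, so only a fraction of the parameters is active per token. The article does not disclose MAI-1-preview's expert count, routing scheme, or layer sizes, so the pure-Python sketch below is a generic top-k gating illustration under assumed toy values (four scalar experts, k = 2, fixed router scores), not Microsoft's implementation.

```python
import math
from typing import Callable, List, Sequence, Tuple


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their gate weights.

    Returns (expert_index, weight) pairs whose weights sum to 1.
    Ties keep the lower index first because Python's sort is stable.
    """
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_forward(x: float,
                router_logits: Sequence[float],
                experts: Sequence[Callable[[float], float]],
                k: int = 2) -> float:
    """Run only the selected experts and sum their gate-weighted outputs."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))


# Toy setup (hypothetical): four scalar "experts" and fixed router scores
# for a single token; experts 1 and 3 tie for the top score.
experts = [lambda x: 2 * x, lambda x: x + 1, lambda x: -x, lambda x: x * x]
logits = [0.1, 2.0, -1.0, 2.0]

routes = top_k_route(logits, k=2)
print(routes)                             # [(1, 0.5), (3, 0.5)]
print(moe_forward(3.0, logits, experts))  # 0.5 * 4 + 0.5 * 9 = 6.5
```

In a production MoE layer the experts are feed-forward networks over vectors, the router is a learned projection, and training usually adds a load-balancing term so tokens spread across experts; those details are omitted here to keep the routing logic visible.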

The Microsoft AI team has made MAI-1-preview available on the LMArena platform, placing it alongside several other models. MAI-1-preview is optimized for instruction following and everyday conversational tasks, making it suitable for consumer-focused applications rather than enterprise or highly specialized use cases. Microsoft has begun rolling out access to the model for select text-based scenarios within Copilot, with gradual expansion planned as feedback is collected and the system is refined.

Model Development and Training Infrastructure

The development of MAI-Voice-1 and MAI-1-preview was supported by Microsoft's next-generation GB200 GPU cluster, custom-built infrastructure optimized specifically for training large generative models. Beyond hardware, Microsoft has invested heavily in talent, assembling a team with deep expertise in generative AI, speech synthesis, and large-scale systems engineering. The company's approach to model development balances fundamental research with practical deployment, aiming to build systems that are not just theoretically impressive but also reliable and useful in everyday scenarios.

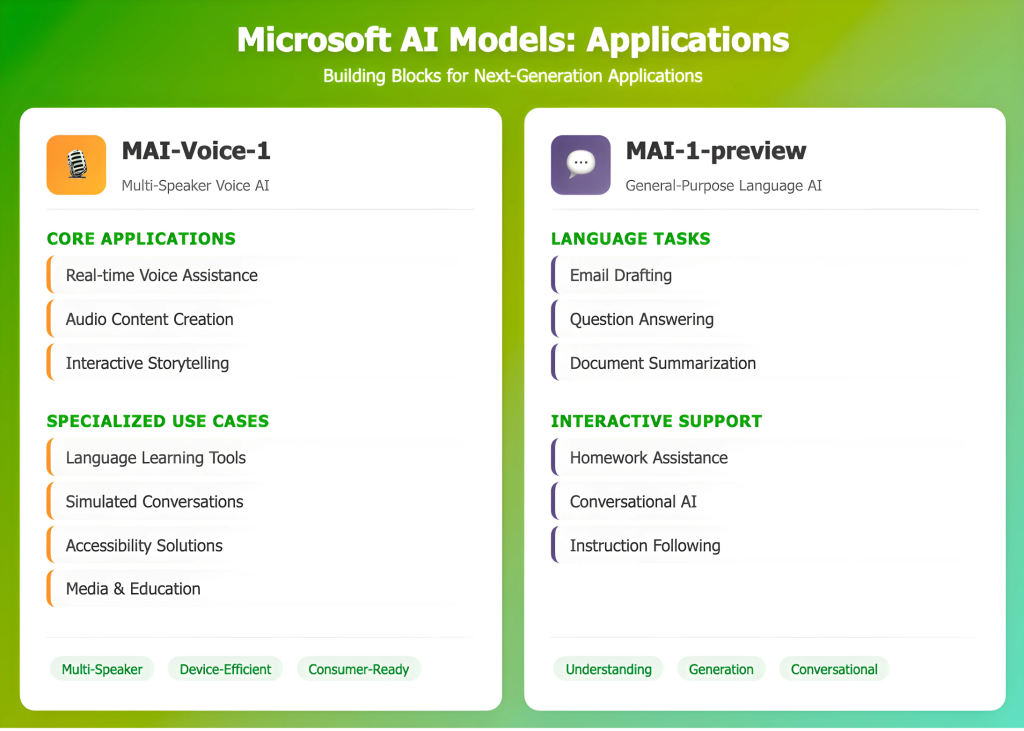

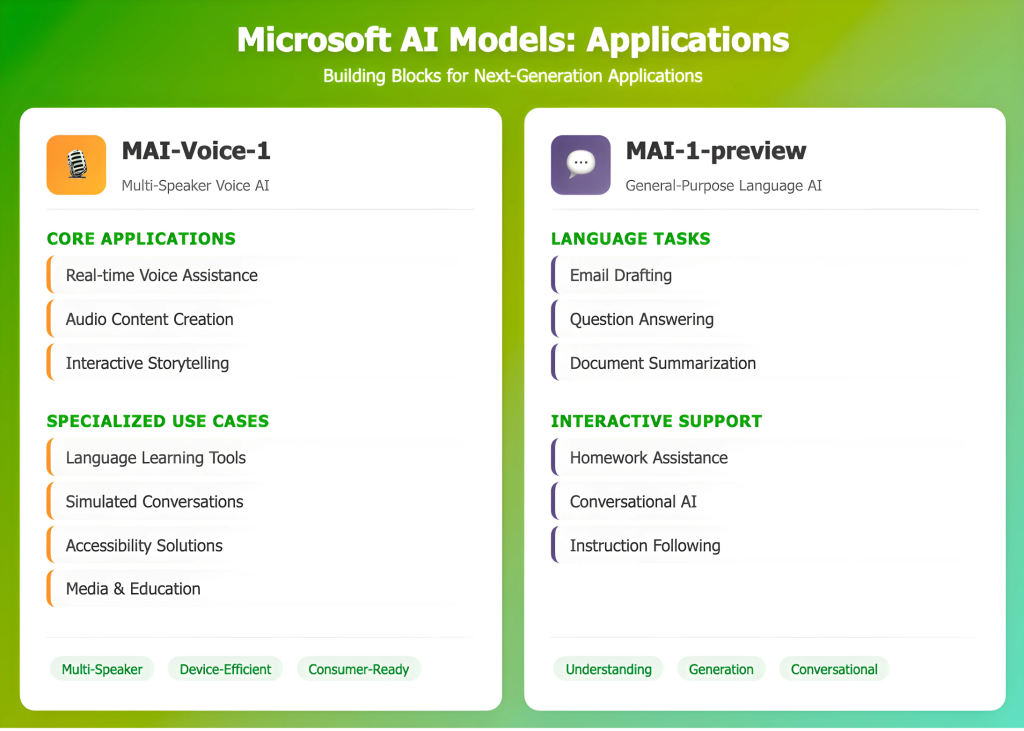

Applications

MAI-Voice-1 can be used for real-time voice assistance, audio content creation in media and education, or accessibility features. Its ability to simulate multiple speakers supports interactive scenarios such as storytelling, language learning, or simulated conversations. The model's efficiency also allows deployment on consumer hardware.

MAI-1-preview is focused on general language understanding and generation, assisting with tasks such as drafting emails, answering questions, summarizing text, or helping with schoolwork in a conversational format.

Conclusion

Microsoft's release of MAI-Voice-1 and MAI-1-preview shows that the company can now develop core generative AI models internally, backed by substantial investment in training infrastructure and technical talent. Both models are intended for practical, real-world use and are being refined with user feedback. This development adds to the diversity of model architectures and training methods in the field, with a focus on systems that are efficient, reliable, and suitable for integration into everyday applications. Microsoft's approach (large-scale resources, gradual deployment, and direct engagement with users) offers one example of how organizations can advance AI capabilities while emphasizing practical, incremental improvement.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.