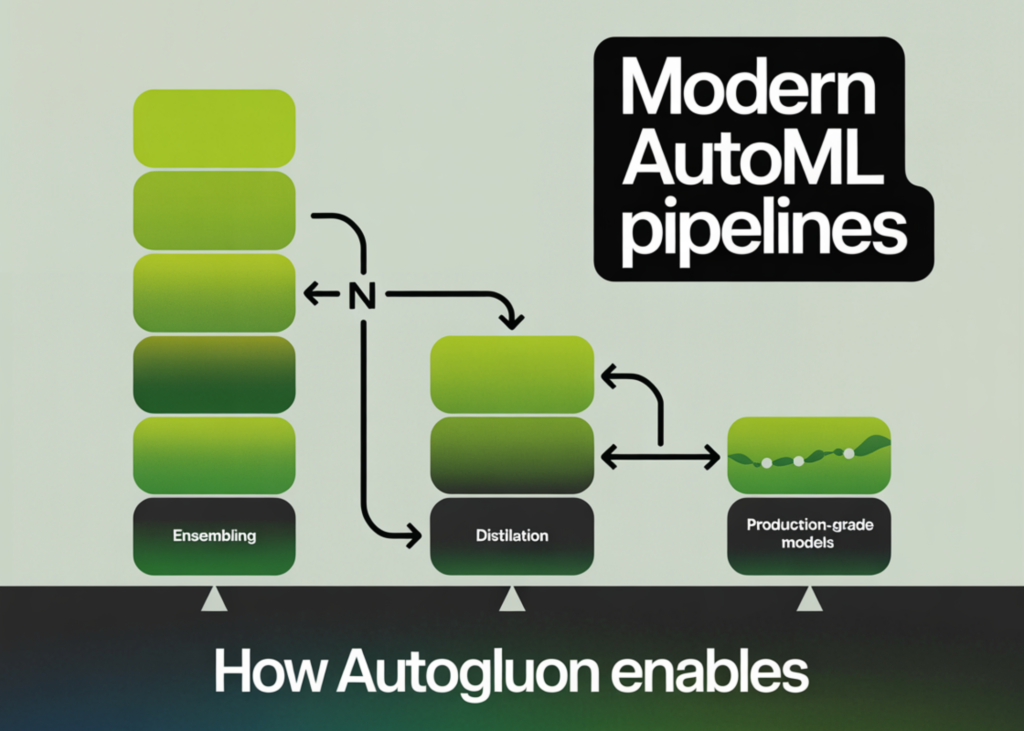

In this tutorial, we build a production-grade tabular machine learning pipeline using AutoGluon, taking a real-world mixed-type dataset from raw ingestion through to deployment-ready artifacts. We train high-quality stacked and bagged ensembles, evaluate performance with robust metrics, perform subgroup and feature-level analysis, and then optimize the model for real-time inference using refit-full and distillation. Throughout the workflow, we focus on practical decisions that balance accuracy, latency, and deployability. Check out the FULL CODES here.

```python
# Colab/Jupyter shell command
!pip -q install -U "autogluon==1.5.0" "scikit-learn>=1.3" "pandas>=2.0" "numpy>=1.24"

import os, time, json, warnings

warnings.filterwarnings("ignore")

import numpy as np
import pandas as pd
from IPython.display import display  # renders DataFrames in notebooks

from sklearn.model_selection import train_test_split
from sklearn.metrics import roc_auc_score, log_loss, accuracy_score, classification_report, confusion_matrix

from autogluon.tabular import TabularPredictor
```

We set up the environment by installing the required libraries and importing all core dependencies used throughout the pipeline. We silence warnings to keep outputs clean and make sure the numerical, tabular, and evaluation utilities are ready.
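Because the install line pins `autogluon==1.5.0`, it can help to record which versions actually ended up in the environment, so results can be traced back later. The sketch below uses only the standard library's `importlib.metadata`; the helper name `installed_versions` is hypothetical and not part of AutoGluon.

```python
from importlib import metadata


def installed_versions(packages):
    """Return {package_name: version string, or None if not installed}."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            # Package is absent from the current environment
            versions[name] = None
    return versions


if __name__ == "__main__":
    for pkg, ver in installed_versions(["autogluon", "scikit-learn", "pandas", "numpy"]).items():
        print(f"{pkg}: {ver}")
```

Saving this dictionary next to the model artifacts is one simple way to make a run reproducible.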

```python
from sklearn.datasets import fetch_openml

# OpenML dataset 40945 is the Titanic passenger list
df = fetch_openml(data_id=40945, as_frame=True).frame

target = "survived"
df[target] = df[target].astype(int)

# "boat" and "body" are recorded after the outcome and leak the label; "home.dest" is free text
drop_cols = [c for c in ["boat", "body", "home.dest"] if c in df.columns]
df = df.drop(columns=drop_cols, errors="ignore")
df = df.replace({None: np.nan})

print("Shape:", df.shape)
print("Target positive rate:", df[target].mean().round(4))
print("Columns:", list(df.columns))

# Stratify on the label so train and test keep the same class balance
train_df, test_df = train_test_split(
    df,
    test_size=0.2,
    random_state=42,
    stratify=df[target],
)
```

We load a real-world mixed-type dataset and apply light preprocessing to prepare a clean training signal. We define the target, drop leaky and free-text columns, and validate the dataset structure. We then create a stratified train-test split to preserve class balance.
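The `stratify=df[target]` argument makes `train_test_split` sample the test set within each class, so the survival rate stays close to the overall rate in both splits. A minimal pure-Python sketch of the idea follows; `stratified_split` is a hypothetical helper for illustration, not scikit-learn's implementation, which allocates rounding across classes differently.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_size=0.2, seed=42):
    """Split row indices so each class contributes ~test_size of its rows to the test set."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)

    train_idx, test_idx = [], []
    for idxs in by_class.values():
        rng.shuffle(idxs)
        n_test = round(len(idxs) * test_size)  # per-class test count
        test_idx.extend(idxs[:n_test])
        train_idx.extend(idxs[n_test:])
    return sorted(train_idx), sorted(test_idx)


if __name__ == "__main__":
    demo_labels = [1] * 40 + [0] * 60  # 40% positive rate
    tr, te = stratified_split(demo_labels)
    print("train rate:", sum(demo_labels[i] for i in tr) / len(tr))
    print("test rate:", sum(demo_labels[i] for i in te) / len(te))
```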

```python
def has_gpu():
    """Return True if PyTorch is installed and can see a CUDA device."""
    try:
        import torch
        return torch.cuda.is_available()
    except Exception:
        return False

# The "extreme" preset is aimed at GPU machines; fall back to "best_quality" on CPU
presets = "extreme" if has_gpu() else "best_quality"

save_path = "/content/autogluon_titanic_advanced"
os.makedirs(save_path, exist_ok=True)

predictor = TabularPredictor(
    label=target,
    eval_metric="roc_auc",
    path=save_path,
    verbosity=2,
)
```

We detect hardware availability to select the most suitable AutoGluon training preset dynamically. We configure a persistent model directory and initialize the tabular predictor with ROC-AUC as the evaluation metric.
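Because `save_path` is fixed, re-running the notebook writes into the same directory as the previous run. If you prefer to keep each run's artifacts separate, one option is a uniquely named subdirectory per run. The sketch below is an assumption, not part of the tutorial; `make_run_dir` and any base path you pass to it are hypothetical.

```python
import os
import tempfile
import uuid
from datetime import datetime, timezone


def make_run_dir(base_dir):
    """Create and return a fresh, uniquely named run directory under base_dir."""
    stamp = datetime.now(timezone.utc).strftime("%Y%m%d-%H%M%S")
    run_dir = os.path.join(base_dir, f"run-{stamp}-{uuid.uuid4().hex[:8]}")
    os.makedirs(run_dir, exist_ok=False)  # fail loudly rather than reuse a directory
    return run_dir


if __name__ == "__main__":
    base = tempfile.mkdtemp()
    print(make_run_dir(base))
```

You would then pass the returned directory as `path=` to `TabularPredictor`; the timestamp keeps runs sortable and the random suffix avoids collisions within the same second.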

```python
start = time.time()

predictor.fit(
    train_data=train_df,
    presets=presets,
    time_limit=7 * 60,    # 7-minute training budget
    num_bag_folds=5,      # 5-fold bagging for every base model
    num_stack_levels=2,   # two stacking layers on top of the base models
    refit_full=False,     # refit is done explicitly later
)

train_time = time.time() - start
print(f"\nTraining finished in {train_time:.1f}s with presets='{presets}'")
```

We train a high-quality ensemble using bagging and stacking within a controlled time budget. We rely on AutoGluon's automated model search to explore strong model configurations efficiently, and we record training time to understand the computational cost.
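With `num_bag_folds=5`, each base model is trained five times, each time holding out one fold, so every training row receives an out-of-fold (OOF) prediction from a model that never saw it; the next stack level is trained on those OOF predictions. The sketch below illustrates only that OOF bookkeeping with a deliberately trivial "model" (the training-fold label mean). It is a conceptual illustration, not AutoGluon's implementation, and `kfold_indices` / `oof_predictions` are hypothetical names.

```python
import random


def kfold_indices(n_rows, k=5, seed=0):
    """Shuffle row indices and deal them into k roughly equal folds."""
    idx = list(range(n_rows))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def oof_predictions(y, k=5, seed=0):
    """Out-of-fold predictions from a toy model that predicts the training-fold label mean."""
    oof = [None] * len(y)
    for held_out in kfold_indices(len(y), k, seed):
        held = set(held_out)
        train_labels = [y[i] for i in range(len(y)) if i not in held]
        fold_pred = sum(train_labels) / len(train_labels)  # "fit" on the other k-1 folds
        for i in held_out:
            oof[i] = fold_pred  # predict only rows this fold's model never saw
    return oof


if __name__ == "__main__":
    y_demo = [0, 1, 1, 0, 1, 0, 0, 1, 1, 1]
    # A next stack level would consume these OOF values as an extra input feature
    print([round(p, 3) for p in oof_predictions(y_demo, k=5)])
```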

```python
lb = predictor.leaderboard(test_df, silent=True)
print("\n=== Leaderboard (top 15) ===")
display(lb.head(15))

proba = predictor.predict_proba(test_df)
pred = predictor.predict(test_df)
y_true = test_df[target].values

# predict_proba returns one column per class; keep the positive-class column
if isinstance(proba, pd.DataFrame) and 1 in proba.columns:
    y_proba = proba[1].values
else:
    y_proba = np.asarray(proba).reshape(-1)

print("\n=== Test Metrics ===")
# Built-in round() works whether sklearn returns a NumPy scalar or a Python float
print("ROC-AUC:", round(roc_auc_score(y_true, y_proba), 5))
print("LogLoss:", round(log_loss(y_true, np.clip(y_proba, 1e-6, 1 - 1e-6)), 5))
print("Accuracy:", round(accuracy_score(y_true, pred), 5))
print("\nClassification report:\n", classification_report(y_true, pred))
```

We evaluate the trained models on the held-out test set and inspect the leaderboard to compare performance. We compute probabilistic and discrete predictions and derive key classification metrics, which together show how well the model ranks passengers, how good its probabilities are, and how accurate its hard predictions are.
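To make the two probabilistic metrics concrete: ROC-AUC is the probability that a randomly chosen positive is scored above a randomly chosen negative (ties count half), and log loss averages the negative log-likelihood of the true label, which is why the code above clips probabilities away from 0 and 1. The pure-Python sketch below computes both from those definitions; it is O(n_pos × n_neg) and meant for illustration, not as a replacement for scikit-learn.

```python
import math


def pairwise_auc(y_true, scores):
    """ROC-AUC as the fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))


def clipped_log_loss(y_true, probs, eps=1e-6):
    """Mean binary cross-entropy with probabilities clipped to [eps, 1 - eps]."""
    total = 0.0
    for y, p in zip(y_true, probs):
        p = min(max(p, eps), 1 - eps)
        total += -math.log(p) if y == 1 else -math.log(1 - p)
    return total / len(y_true)


if __name__ == "__main__":
    y_demo = [0, 0, 1, 1]
    p_demo = [0.1, 0.4, 0.35, 0.8]
    print(pairwise_auc(y_demo, p_demo))  # 0.75: 3 of 4 pos/neg pairs ranked correctly
    print(clipped_log_loss(y_demo, p_demo))
```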

```python
# Slice analysis: ROC-AUC within each passenger class
if "pclass" in test_df.columns:
    print("\n=== Slice AUC by pclass ===")
    for grp, part in test_df.groupby("pclass"):
        part_proba = predictor.predict_proba(part)
        part_proba = (
            part_proba[1].values
            if isinstance(part_proba, pd.DataFrame) and 1 in part_proba.columns
            else np.asarray(part_proba).reshape(-1)
        )
        auc = roc_auc_score(part[target].values, part_proba)
        print(f"pclass={grp}: AUC={auc:.4f} (n={len(part)})")

# Permutation feature importance on the held-out set
fi = predictor.feature_importance(test_df, silent=True)
print("\n=== Feature importance (top 20) ===")
display(fi.head(20))
```

We analyze model behavior through subgroup performance slicing and permutation-based feature importance. We identify how performance varies across meaningful segments of the data, which helps us assess robustness and interpretability before deployment.
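Permutation importance measures how much the evaluation score drops when one feature's values are shuffled, breaking that feature's relationship with the label. The sketch below shows the computation on a hypothetical toy model and dataset with a single shuffle per feature; AutoGluon's `feature_importance()` repeats the shuffle several times and reports the mean with spread and confidence bounds, which this sketch omits.

```python
import random


def accuracy(y_true, y_pred):
    return sum(a == b for a, b in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(predict, rows, y, feature, seed=0):
    """Baseline accuracy minus accuracy after shuffling one feature's column."""
    baseline = accuracy(y, [predict(r) for r in rows])
    values = [r[feature] for r in rows]
    random.Random(seed).shuffle(values)
    shuffled_rows = [{**r, feature: v} for r, v in zip(rows, values)]
    permuted = accuracy(y, [predict(r) for r in shuffled_rows])
    return baseline - permuted


def toy_model(row):
    """Hypothetical model that reads only the "signal" feature."""
    return row["signal"]


if __name__ == "__main__":
    # Hypothetical toy data: the label equals "signal"; "noise" is never used
    rows = [{"signal": i % 2, "noise": i} for i in range(20)]
    y_demo = [r["signal"] for r in rows]
    for feat in ("signal", "noise"):
        print(feat, permutation_importance(toy_model, rows, y_demo, feat))
```

A feature the model ignores always scores zero, which is why near-zero or negative importances are usually treated as candidates for removal.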

```python
# refit_full() makes the _FULL version the predictor's best model by default,
# so capture the bagged best model first for a fair latency comparison
best_model = predictor.model_best

t0 = time.time()
refit_map = predictor.refit_full()  # maps each original model to its "_FULL" refit
t_refit = time.time() - t0
print(f"\nrefit_full completed in {t_refit:.1f}s")
print("Refit mapping (sample):", dict(list(refit_map.items())[:5]))

lb_full = predictor.leaderboard(test_df, silent=True)
print("\n=== Leaderboard after refit_full (top 15) ===")
display(lb_full.head(15))

full_candidates = [m for m in predictor.model_names() if m.endswith("_FULL")]

def bench_infer(model_name, df_in, repeats=3):
    """Median wall-clock time of predictor.predict() for one model."""
    times = []
    for _ in range(repeats):
        t1 = time.time()
        _ = predictor.predict(df_in, model=model_name)
        times.append(time.time() - t1)
    return float(np.median(times))

small_batch = test_df.drop(columns=[target]).head(256)

lat_best = bench_infer(best_model, small_batch)
print(f"\nBest model: {best_model} | median predict() latency on 256 rows: {lat_best:.4f}s")

if full_candidates:
    lb_full_sorted = lb_full.sort_values(by="score_test", ascending=False)
    best_full = lb_full_sorted[lb_full_sorted["model"].str.endswith("_FULL")].iloc[0]["model"]
    lat_full = bench_infer(best_full, small_batch)
    print(f"Best FULL model: {best_full} | median predict() latency on 256 rows: {lat_full:.4f}s")
    print(f"Speedup factor (best / full): {lat_best / max(lat_full, 1e-9):.2f}x")

# Optional: distill the ensemble into faster student models
try:
    t0 = time.time()
    distill_result = predictor.distill(
        train_data=train_df,
        time_limit=4 * 60,
        augment_method="spunge",
    )
    t_distill = time.time() - t0
    print(f"\nDistillation completed in {t_distill:.1f}s")
except Exception as e:
    print("\nDistillation step failed")
    print("Error:", repr(e))

lb2 = predictor.leaderboard(test_df, silent=True)
print("\n=== Leaderboard after distillation attempt (top 20) ===")
display(lb2.head(20))

# Persist, reload, and sanity-check predictions
predictor.save()
reloaded = TabularPredictor.load(save_path)

sample = test_df.drop(columns=[target]).sample(8, random_state=0)
sample_pred = reloaded.predict(sample)
sample_proba = reloaded.predict_proba(sample)

print("\n=== Reloaded predictor sanity-check ===")
print(sample.assign(pred=sample_pred).head())
print("\nProbabilities (head):")
display(sample_proba.head())

artifacts = {
    "path": save_path,
    "presets": presets,
    "best_model": reloaded.model_best,
    "model_names": reloaded.model_names(),
    "leaderboard_top10": lb2.head(10).to_dict(orient="records"),
}

with open(os.path.join(save_path, "run_summary.json"), "w") as f:
    json.dump(artifacts, f, indent=2, default=str)  # default=str guards non-JSON values

print("\nSaved summary to:", os.path.join(save_path, "run_summary.json"))
print("Done.")
```

We optimize the trained ensemble for inference by collapsing each bagged model into a single model refit on the full training data, and we benchmark the resulting latency improvement. We optionally distill the ensemble into faster models and validate persistence through save-reload checks. Finally, we export the structured artifacts required for a production handoff.
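The `bench_infer` helper times calls with `time.time()` and takes the median of three runs. A somewhat more robust variant, sketched here as an assumption rather than as part of the tutorial, uses `time.perf_counter()` (a monotonic, high-resolution clock meant for measuring intervals), discards a few warm-up calls so first-call overheads are not counted, and reports a tail percentile alongside the median. `measure_latency` is a hypothetical name; you could pass it something like `lambda: predictor.predict(small_batch, model=best_model)`.

```python
import math
import statistics
import time


def measure_latency(fn, repeats=10, warmup=2):
    """Call fn() repeatedly and return latency stats in seconds, excluding warm-up calls."""
    for _ in range(warmup):
        fn()  # warm-up calls are executed but not timed
    samples = []
    for _ in range(repeats):
        t0 = time.perf_counter()
        fn()
        samples.append(time.perf_counter() - t0)
    samples.sort()
    p95 = samples[math.ceil(0.95 * len(samples)) - 1]  # nearest-rank 95th percentile
    return {"min": samples[0], "median": statistics.median(samples), "p95": p95}


if __name__ == "__main__":
    stats = measure_latency(lambda: sum(range(100_000)), repeats=5)
    print({k: f"{v * 1e3:.2f} ms" for k, v in stats.items()})
```

For real-time serving, the p95 figure is often more informative than the median, since it reflects the slow requests users actually notice.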

In conclusion, we implemented an end-to-end AutoGluon workflow that turns raw tabular data into production-ready models with minimal manual intervention, while keeping firm control over accuracy, robustness, and inference efficiency. We performed systematic error analysis and feature-importance evaluation, optimized large ensembles through refitting and distillation, and validated deployment readiness with latency benchmarking and artifact packaging. This workflow supports deploying high-performing, scalable, and interpretable tabular models in real-world production environments.
