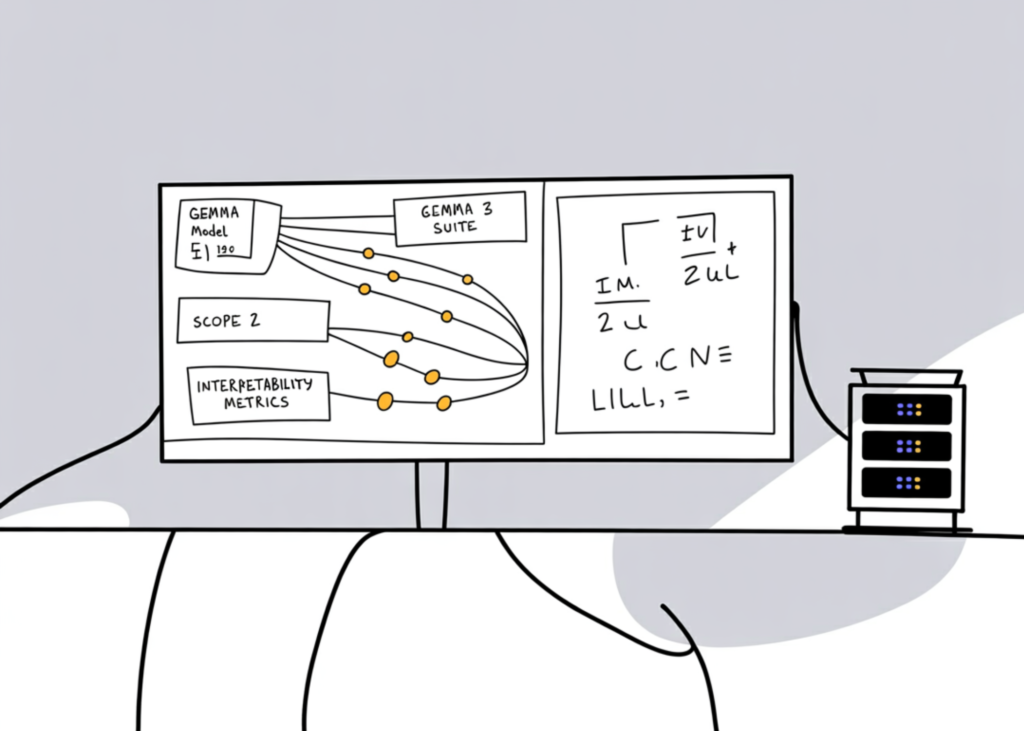

Google DeepMind researchers introduce Gemma Scope 2, an open suite of interpretability tools that exposes how Gemma 3 language models process and represent information across all layers, from 270M to 27B parameters.

Its core goal is simple: give AI safety and alignment teams a practical way to trace model behavior back to internal features instead of relying solely on input-output analysis. When a Gemma 3 model is jailbroken, hallucinates or shows sycophantic behavior, Gemma Scope 2 lets researchers inspect which internal features fired and how those activations flowed through the network.

What’s Gemma Scope 2?

Gemma Scope 2 is a comprehensive, open suite of sparse autoencoders and related tools trained on the internal activations of the Gemma 3 model family. Sparse autoencoders (SAEs) act as a microscope on the model: they decompose high-dimensional activations into a sparse set of human-inspectable features that correspond to concepts or behaviors.
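To make the microscope analogy concrete, here is a minimal NumPy sketch of a sparse autoencoder forward pass. It assumes a JumpReLU activation (the architecture used in the original Gemma Scope; the text above does not say whether Gemma Scope 2 keeps it), random weights instead of trained ones, and hypothetical names (`JumpReLUSAE`, `top_features`) and sizes. It is an illustration, not the Gemma Scope 2 API.

```python
import numpy as np


class JumpReLUSAE:
    """Hypothetical toy SAE: d_model-dim activations -> d_sae sparse features -> reconstruction.

    Weights are random here; a real SAE would load trained parameters.
    """

    def __init__(self, d_model: int, d_sae: int, threshold: float = 1.0, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.W_enc = rng.normal(0.0, 1.0 / np.sqrt(d_model), (d_model, d_sae))
        self.b_enc = np.zeros(d_sae)
        self.W_dec = rng.normal(0.0, 1.0 / np.sqrt(d_sae), (d_sae, d_model))
        self.b_dec = np.zeros(d_model)
        # One threshold per feature (learned in a real JumpReLU SAE, fixed here).
        self.threshold = np.full(d_sae, threshold)

    def encode(self, x: np.ndarray) -> np.ndarray:
        pre = x @ self.W_enc + self.b_enc
        # JumpReLU: keep a pre-activation only if it exceeds its feature's threshold.
        return np.where(pre > self.threshold, pre, 0.0)

    def decode(self, f: np.ndarray) -> np.ndarray:
        return f @ self.W_dec + self.b_dec

    def __call__(self, x: np.ndarray):
        f = self.encode(x)
        return f, self.decode(f)


def top_features(f_row: np.ndarray, k: int = 5):
    """Return up to k (feature_index, activation) pairs with the largest positive activations."""
    idx = np.argsort(f_row)[::-1][:k]
    return [(int(i), float(f_row[i])) for i in idx if f_row[i] > 0]


# Usage: 4 random vectors stand in for 4 tokens of residual-stream activations.
sae = JumpReLUSAE(d_model=64, d_sae=512)
acts = np.random.default_rng(1).normal(size=(4, 64))
f, x_hat = sae(acts)
print((f > 0).sum(axis=1))      # number of features that fired for each token
print(top_features(f[0], k=3))  # strongest features for the first token
```

Each row of `f` records which features fired for one token, and `top_features` is the kind of lookup a researcher might run to see what was active during, say, a jailbreak. With trained weights, `x_hat` should closely reconstruct the input while most entries of `f` stay at zero.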

Training Gemma Scope 2 required storing around 110 petabytes of activation data and fitting over 1 trillion total parameters across all interpretability models.

The suite targets every Gemma 3 variant, including the 270M, 1B, 4B, 12B and 27B parameter models, and covers the full depth of the network. This matters because many safety-relevant behaviors only appear at larger scales.

What’s new compared to the original Gemma Scope?

The first Gemma Scope release focused on Gemma 2 and already enabled research on model hallucination, identifying secrets known by a model, and training safer models.

Gemma Scope 2 extends that work in four main ways:

- The tools now span the entire Gemma 3 family up to 27B parameters, which is required to study emergent behaviors observed only in larger models, such as the behavior previously analyzed in the 27B C2S Scale model for scientific discovery tasks.

- Gemma Scope 2 includes SAEs and transcoders trained on every layer of Gemma 3. Skip transcoders and cross-layer transcoders help trace multi-step computations that are distributed across layers.

- The suite applies the Matryoshka training technique so that the SAEs learn more useful and stable features, mitigating some flaws identified in the earlier Gemma Scope release.

- There are dedicated interpretability tools for the chat-tuned Gemma 3 models, which make it possible to analyze multi-step behaviors such as jailbreaks, refusal mechanisms and chain-of-thought faithfulness.
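The Matryoshka idea can be sketched as a loss function: the feature dictionary is treated as a series of nested prefixes, and each prefix must reconstruct the activation on its own, which pushes broad, general features into the earliest latents. This is a minimal sketch in the spirit of Matryoshka SAEs, not the Gemma Scope 2 training code; `matryoshka_loss`, the nesting sizes (`prefix_sizes`) and the L1 sparsity term with its coefficient are assumptions chosen for illustration.

```python
import numpy as np


def matryoshka_loss(x, f, W_dec, b_dec, prefix_sizes, l1_coeff=1e-3):
    """Hypothetical Matryoshka-style SAE objective.

    x: (batch, d_model) activations; f: (batch, d_sae) feature activations;
    W_dec: (d_sae, d_model) decoder; b_dec: (d_model,) decoder bias;
    prefix_sizes: increasing ints <= d_sae defining the nested sub-dictionaries.
    """
    total = 0.0
    for m in prefix_sizes:
        # Reconstruct using only the first m features (one nested sub-dictionary).
        x_hat_m = f[:, :m] @ W_dec[:m] + b_dec
        # Squared error summed over the model dimension, averaged over the batch.
        total += np.mean(np.sum((x - x_hat_m) ** 2, axis=-1))
    # Sparsity penalty on all features (L1 here, an assumption for simplicity).
    total += l1_coeff * np.mean(np.sum(np.abs(f), axis=-1))
    return float(total)


# Usage with random stand-in tensors.
rng = np.random.default_rng(0)
x = rng.normal(size=(8, 16))
W_dec = rng.normal(size=(64, 16)) / 8.0
f = np.maximum(rng.normal(size=(8, 64)), 0.0)
print(matryoshka_loss(x, f, W_dec, np.zeros(16), prefix_sizes=[8, 16, 32, 64]))
```

Because the first few features appear in every reconstruction term, they receive the most gradient pressure to be broadly useful, while later features are free to specialize. This is one plausible reason nested training yields more stable features than a single flat dictionary.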

Key Takeaways

- Gemma Scope 2 is an open interpretability suite for all Gemma 3 models, from 270M to 27B parameters, with SAEs and transcoders on every layer of both the pretrained and instruction-tuned variants.

- The suite uses sparse autoencoders as a microscope that decomposes internal activations into sparse, concept-like features, plus transcoders that track how these features propagate across layers.

- Gemma Scope 2 is explicitly positioned for AI safety work: studying jailbreaks, hallucinations, sycophancy, refusal mechanisms and discrepancies between a model's internal state and its communicated reasoning in Gemma 3.
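A transcoder differs from an SAE in what it reconstructs: it reads the input to an MLP layer and predicts that layer's output through sparse features, so the features describe the computation the layer performs rather than a single activation. A skip transcoder adds a linear path from input to output alongside the sparse path. The sketch below shows one such layer with random weights, a plain ReLU and hypothetical names (`SkipTranscoder`, `transcoder_mse`). Cross-layer transcoders, which write to several later layers, are not shown, and none of this is the released Gemma Scope 2 code.

```python
import numpy as np


class SkipTranscoder:
    """Hypothetical single-layer skip transcoder: MLP input -> sparse features -> predicted MLP output."""

    def __init__(self, d_model: int, d_tc: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.W_enc = rng.normal(0.0, 1.0 / np.sqrt(d_model), (d_model, d_tc))
        self.b_enc = np.zeros(d_tc)
        self.W_dec = rng.normal(0.0, 1.0 / np.sqrt(d_tc), (d_tc, d_model))
        self.b_dec = np.zeros(d_model)
        # Linear skip path; starts at zero so the sparse path does all the work initially.
        self.W_skip = np.zeros((d_model, d_model))

    def __call__(self, mlp_in: np.ndarray):
        # Sparse features computed from the MLP *input* (ReLU used here for simplicity).
        f = np.maximum(mlp_in @ self.W_enc + self.b_enc, 0.0)
        # Predicted MLP *output* = sparse reconstruction + linear skip term.
        mlp_out_hat = f @ self.W_dec + self.b_dec + mlp_in @ self.W_skip
        return f, mlp_out_hat


def transcoder_mse(tc: SkipTranscoder, mlp_in: np.ndarray, mlp_out: np.ndarray) -> float:
    """Training target: match the real MLP output, not the MLP input."""
    _, pred = tc(mlp_in)
    return float(np.mean(np.sum((mlp_out - pred) ** 2, axis=-1)))


# Usage: a stand-in one-matrix "MLP" (hypothetical) produces the targets.
rng = np.random.default_rng(3)
W_mlp = rng.normal(size=(8, 8)) / np.sqrt(8)
mlp_in = rng.normal(size=(5, 8))
mlp_out = np.maximum(mlp_in @ W_mlp, 0.0)
tc = SkipTranscoder(d_model=8, d_tc=32)
print(transcoder_mse(tc, mlp_in, mlp_out))
```

Because each feature's encoder reads from a layer's input and its decoder writes to that layer's output, the features can be linked from one layer to the next, which is the property that makes transcoders useful for tracing multi-step computations.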

Take a look at the Paper, Technical details and Model Weights.

Michal Sutter is a data science professional with a Master of Science in Data Science from the University of Padova. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels at transforming complex datasets into actionable insights.