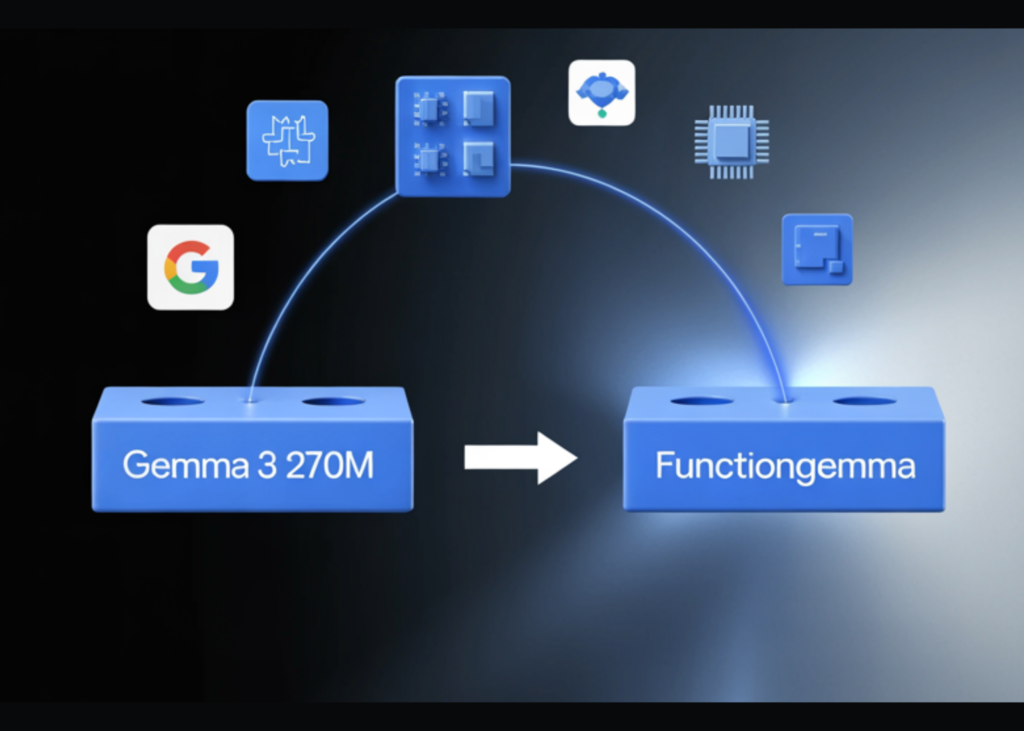

Google has released FunctionGemma, a specialized version of the Gemma 3 270M model that is trained specifically for function calling and designed to run as an edge agent that maps natural language to executable API actions.

What is FunctionGemma?

FunctionGemma is a 270M parameter, text-only transformer based on Gemma 3 270M. It keeps the same architecture as Gemma 3 and is released as an open model under the Gemma license, but the training objective and chat format are dedicated to function calling rather than free-form dialogue.

The model is intended to be fine-tuned for specific function calling tasks. It is not positioned as a general chat assistant. The primary design goal is to translate user instructions and tool definitions into structured function calls, then optionally summarize tool responses for the user.

From an interface perspective, FunctionGemma is presented as a standard causal language model. Inputs and outputs are text sequences, with an input context of 32K tokens and an output budget of up to 32K tokens per request, shared with the input length.
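Because the output budget is shared with the input, the room left for generation shrinks as the prompt grows. The sketch below shows only that arithmetic. It assumes "32K" means 32,768 tokens (the article does not give the exact figure), and `remaining_output_budget` is a hypothetical helper, not part of any library.

```python
CONTEXT_WINDOW = 32 * 1024  # assumption: "32K" read as 32,768 tokens


def remaining_output_budget(prompt_tokens: int, context_window: int = CONTEXT_WINDOW) -> int:
    """Return how many tokens can still be generated for a prompt of the given length."""
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    # Input and output share one window, so the budget is whatever the prompt leaves over.
    return max(context_window - prompt_tokens, 0)


print(remaining_output_budget(2_000))  # 30768
```

In practice a value like this could be used to cap the generation length when long tool lists are placed in the prompt.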

Architecture and training data

The model uses the Gemma 3 transformer architecture and the same 270M parameter scale as Gemma 3 270M. The training and runtime stack reuse the research and infrastructure used for Gemini, including JAX and ML Pathways on large TPU clusters.

FunctionGemma uses Gemma's 256K vocabulary, which is optimized for JSON structures and multilingual text. This improves token efficiency for function schemas and tool responses and reduces sequence length for edge deployments where latency and memory are tight.

The model is trained on 6T tokens, with a knowledge cutoff in August 2024. The dataset focuses on two main categories:

- public tool and API definitions

- tool use interactions that include prompts, function calls, function responses and natural language follow-up messages that summarize outputs or request clarification

This training signal teaches both syntax (which function to call and how to format arguments) and intent (when to call a function and when to ask for more information).
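To make the two outcomes concrete, here is a minimal hand-written sketch of a tool definition and of the choice between emitting a structured call and asking for clarification. The model learns this behavior from data; the rule below is only an illustration. The tool `create_calendar_event`, its parameters, and the helper names are hypothetical and not taken from the training set.

```python
from typing import Any

# Hypothetical tool definition in JSON-schema style (illustrative only).
CREATE_EVENT_TOOL: dict[str, Any] = {
    "name": "create_calendar_event",
    "description": "Create a calendar event.",
    "parameters": {
        "type": "object",
        "properties": {
            "title": {"type": "string"},
            "start_time": {"type": "string"},
        },
        "required": ["title", "start_time"],
    },
}


def missing_arguments(tool: dict[str, Any], arguments: dict[str, Any]) -> list[str]:
    """List required parameters that the extracted arguments do not cover."""
    return [name for name in tool["parameters"]["required"] if name not in arguments]


def next_step(tool: dict[str, Any], arguments: dict[str, Any]) -> dict[str, Any]:
    """Return a structured call if all required arguments exist, else a clarification request."""
    missing = missing_arguments(tool, arguments)
    if missing:
        return {"type": "clarification", "ask_for": missing}
    return {"type": "function_call", "name": tool["name"], "arguments": arguments}


print(next_step(CREATE_EVENT_TOOL, {"title": "Lunch"}))
print(next_step(CREATE_EVENT_TOOL, {"title": "Lunch", "start_time": "2025-01-01T12:00"}))
```

The first call lacks `start_time`, so the sketch returns a clarification request; the second returns a complete function call.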

Conversation format and control tokens

FunctionGemma does not use a free-form chat format. It expects a strict conversation template that separates roles and tool-related regions. Each conversation turn is wrapped in turn delimiters and labeled with a role: developer, user or model.

Within these turns, FunctionGemma relies on a fixed set of control token pairs.

These markers let the model distinguish natural language text from function schemas and from execution results. The Hugging Face apply_chat_template API and the official Gemma templates generate this structure automatically for messages and tool lists.
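A rough sketch of how messages and a tool list might be handed to the tokenizer's `apply_chat_template` method follows. The tool `set_flashlight`, the developer message wording, and the helper names are illustrative assumptions. The model identifier is deliberately left as a parameter because the article does not state it.

```python
def build_inputs():
    """Return (messages, tools) in the shape accepted by apply_chat_template."""
    messages = [
        # Illustrative developer instruction; the official wording may differ.
        {"role": "developer", "content": "You can call the functions listed below."},
        {"role": "user", "content": "Turn on the flashlight"},
    ]
    tools = [
        {
            "type": "function",
            "function": {
                "name": "set_flashlight",  # hypothetical tool
                "description": "Turn the device flashlight on or off.",
                "parameters": {
                    "type": "object",
                    "properties": {"enabled": {"type": "boolean"}},
                    "required": ["enabled"],
                },
            },
        }
    ]
    return messages, tools


def render_prompt(model_id: str) -> str:
    """Render the templated prompt string for the given checkpoint."""
    # Imported lazily so the data structures above can be inspected without the library.
    from transformers import AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    messages, tools = build_inputs()
    return tokenizer.apply_chat_template(
        messages, tools=tools, add_generation_prompt=True, tokenize=False
    )
```

Calling `render_prompt` with the checkpoint name listed on the model card should return the fully templated string, including the role wrappers and control tokens, without the caller writing any of them by hand.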

Fine tuning and Mobile Actions performance

Out of the box, FunctionGemma is already trained for generic tool use. However, the official Mobile Actions guide and the model card emphasize that small models reach production-level reliability only after task-specific fine tuning.

The Mobile Actions demo uses a dataset where each example exposes a small set of tools for Android system operations, for example creating a contact, setting a calendar event, controlling the flashlight and viewing a map. FunctionGemma learns to map utterances such as "Create a calendar event for lunch tomorrow" or "Turn on the flashlight" to those tools with structured arguments.

On the Mobile Actions evaluation, the base FunctionGemma model reaches 58% accuracy on a held-out test set. After fine tuning with the public cookbook recipe, accuracy increases to 85%.
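The article does not say how accuracy is scored. One plausible reading, shown here purely as an assumption, is exact match on the function name and arguments of each predicted call. The helper names and toy data below are hypothetical and unrelated to the reported 58% and 85% figures.

```python
def call_matches(predicted: dict, expected: dict) -> bool:
    """A prediction counts as correct only if both name and arguments are identical."""
    return (
        predicted.get("name") == expected.get("name")
        and predicted.get("arguments") == expected.get("arguments")
    )


def exact_match_accuracy(predictions: list[dict], references: list[dict]) -> float:
    """Fraction of predicted calls that exactly match their reference call."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        return 0.0
    hits = sum(call_matches(p, r) for p, r in zip(predictions, references))
    return hits / len(references)


# Toy data for illustration only.
references = [
    {"name": "set_flashlight", "arguments": {"enabled": True}},
    {"name": "create_calendar_event", "arguments": {"title": "Lunch"}},
]
predictions = [
    {"name": "set_flashlight", "arguments": {"enabled": True}},
    {"name": "create_calendar_event", "arguments": {"title": "Dinner"}},
]
print(exact_match_accuracy(predictions, references))  # 0.5
```

A strict metric like this penalizes any argument mismatch, which is one reason task-specific examples can move the score substantially.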

Edge agents and reference demos

The main deployment target for FunctionGemma is edge agents that run locally on phones, laptops and small accelerators such as NVIDIA Jetson Nano. The small parameter count, 0.3B, and support for quantization allow inference with low memory and low latency on consumer hardware.
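A back-of-the-envelope estimate shows why the parameter count matters on device. The sketch below covers weight storage only, using 270M parameters and a few illustrative precisions. It ignores activations, the KV cache and any quantization metadata, and the listed bit widths are examples rather than a statement of which formats are officially supported.

```python
PARAMETER_COUNT = 270_000_000  # 270M parameters, as stated in the article

# Bytes needed to store one parameter at each illustrative precision.
BYTES_PER_PARAMETER = {"float32": 4.0, "float16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_mb(dtype: str, parameter_count: int = PARAMETER_COUNT) -> float:
    """Approximate weight storage in megabytes (10^6 bytes) for the given precision."""
    return parameter_count * BYTES_PER_PARAMETER[dtype] / 1_000_000


for dtype in BYTES_PER_PARAMETER:
    print(f"{dtype}: {weight_memory_mb(dtype):.0f} MB")
# float32: 1080 MB
# float16: 540 MB
# int8: 270 MB
# int4: 135 MB
```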

Google ships several reference experiences through the Google AI Edge Gallery:

- Mobile Actions shows a fully offline assistant-style agent for device control, using FunctionGemma fine-tuned on the Mobile Actions dataset and deployed on device.

- Tiny Garden is a voice-controlled game where the model decomposes commands such as "Plant sunflowers in the top row and water them" into domain-specific functions like `plant_seed` and `water_plots` with explicit grid coordinates.

- FunctionGemma Physics Playground runs entirely in the browser using Transformers.js and lets users solve physics puzzles via natural language instructions that the model converts into simulation actions.

These demos validate that a 270M parameter function caller can support multi-step logic on device without server calls, given appropriate fine tuning and tool interfaces.
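The Tiny Garden command mentioned above gives a feel for what multi-step logic means on the application side. In this minimal sketch, a list of structured calls is executed in order against a small grid. The function names `plant_seed` and `water_plots` come from the article, but their signatures, the grid representation and the call list are assumptions made for illustration. In the real demo the model would produce the calls.

```python
from typing import Callable

Grid = list[list[dict]]


def make_garden(rows: int, cols: int) -> Grid:
    """Create an empty garden where each plot tracks its seed and watered state."""
    return [[{"seed": None, "watered": False} for _ in range(cols)] for _ in range(rows)]


def plant_seed(garden: Grid, row: int, col: int, seed: str) -> None:
    garden[row][col]["seed"] = seed


def water_plots(garden: Grid, plots: list[list[int]]) -> None:
    for row, col in plots:
        garden[row][col]["watered"] = True


# Registry that maps function names in a call to local handlers.
TOOLS: dict[str, Callable[..., None]] = {"plant_seed": plant_seed, "water_plots": water_plots}


def run_calls(garden: Grid, calls: list[dict]) -> None:
    """Execute structured calls in order, rejecting unknown function names."""
    for call in calls:
        handler = TOOLS.get(call["name"])
        if handler is None:
            raise ValueError(f"unknown function: {call['name']}")
        handler(garden, **call["arguments"])


# Hand-written stand-in for model output for:
# "Plant sunflowers in the top row and water them"
garden = make_garden(rows=2, cols=3)
calls = [
    {"name": "plant_seed", "arguments": {"row": 0, "col": c, "seed": "sunflower"}}
    for c in range(3)
]
calls.append({"name": "water_plots", "arguments": {"plots": [[0, c] for c in range(3)]}})
run_calls(garden, calls)
print(garden[0])
```

Keeping the handlers in a registry means the application only executes functions it has explicitly exposed, which is a common safeguard when acting on model output.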

Key Takeaways

- FunctionGemma is a 270M parameter, text-only variant of Gemma 3 that is trained specifically for function calling, not for open-ended chat, and is released as an open model under the Gemma terms of use.

- The model keeps the Gemma 3 transformer architecture and 256K token vocabulary, supports 32K tokens per request shared between input and output, and is trained on 6T tokens.

- FunctionGemma uses a strict chat template with role-wrapped turns and a fixed set of control token pairs that separate natural language from function schemas and execution results.

- On the Mobile Actions benchmark, accuracy improves from 58% for the base model to 85% after task-specific fine tuning, showing that small function callers need domain data more than prompt engineering.

- The 270M scale and quantization support let FunctionGemma run on phones, laptops and Jetson-class devices, and the model is already integrated into ecosystems such as Hugging Face, Vertex AI, LM Studio and edge demos like Mobile Actions, Tiny Garden and the Physics Playground.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.