In this tutorial, we walk through an advanced yet practical workflow using SpeechBrain. We start by generating our own clean speech samples with gTTS, deliberately adding noise to simulate real-world scenarios, and then applying SpeechBrain's MetricGAN+ model to enhance the audio. Once the audio is denoised, we run automatic speech recognition with a CRDNN system rescored by a language model and compare the word error rates before and after enhancement. By taking this step-by-step approach, we experience firsthand how SpeechBrain lets us build a complete pipeline for speech enhancement and recognition in just a few lines of code.

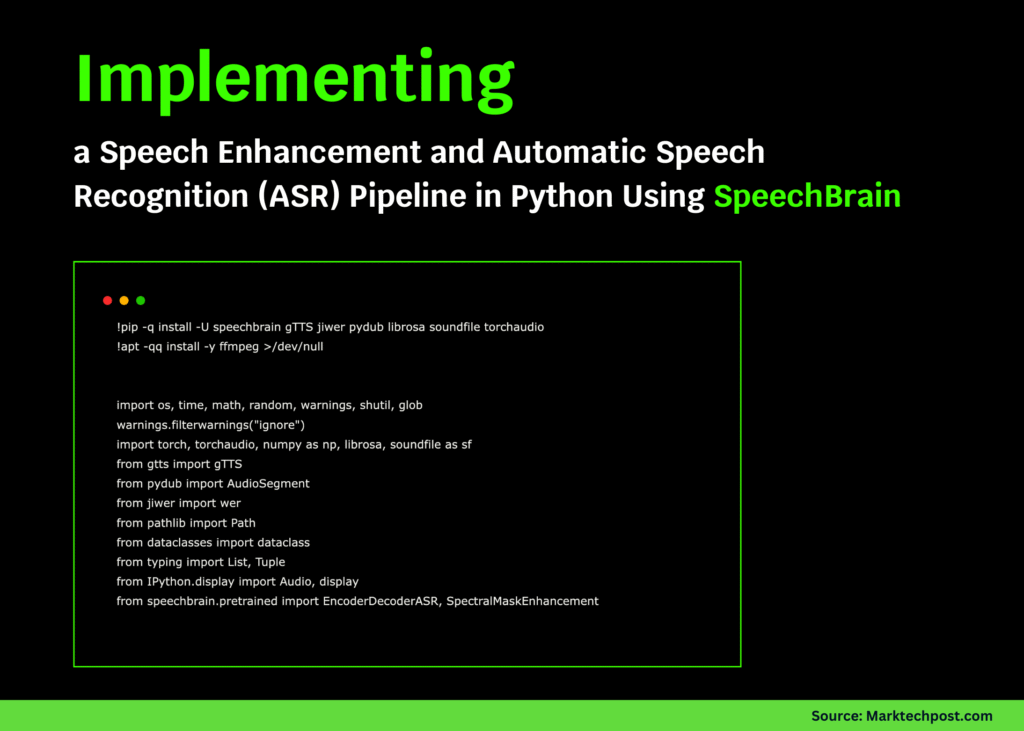

!pip -q install -U speechbrain gTTS jiwer pydub librosa soundfile torchaudio
!apt -qq install -y ffmpeg >/dev/null

import os, time, math, random, warnings, shutil, glob
warnings.filterwarnings("ignore")

import torch, torchaudio, numpy as np, librosa, soundfile as sf
from gtts import gTTS
from pydub import AudioSegment
from jiwer import wer
from pathlib import Path
from dataclasses import dataclass
from typing import List, Tuple
from IPython.display import Audio, display
# Note: newer SpeechBrain releases (1.0+) expose these classes under speechbrain.inference;
# speechbrain.pretrained is the older import path.
from speechbrain.pretrained import EncoderDecoderASR, SpectralMaskEnhancement

# Working directory, target sample rate, and compute device
root = Path("sb_demo"); root.mkdir(exist_ok=True)
sr = 16000
device = "cuda" if torch.cuda.is_available() else "cpu"

We begin by setting up our Colab environment with all the required libraries and tools. We install SpeechBrain along with audio processing packages, define basic paths and parameters, and select the device so we are ready to build our speech pipeline.

def tts_to_wav(text: str, out_wav: str, lang="en"):
    # gTTS writes MP3, so synthesize to a temporary MP3 and convert to mono WAV at sr
    mp3 = out_wav.replace(".wav", ".mp3")
    gTTS(text=text, lang=lang).save(mp3)
    a = AudioSegment.from_file(mp3, format="mp3").set_channels(1).set_frame_rate(sr)
    a.export(out_wav, format="wav")
    os.remove(mp3)

def add_noise(in_wav: str, snr_db: float, out_wav: str):
    y, _ = librosa.load(in_wav, sr=sr, mono=True)
    rms = np.sqrt(np.mean(y**2) + 1e-12)
    # Unit-RMS white Gaussian noise
    n = np.random.normal(0, 1, len(y))
    n = n / (np.sqrt(np.mean(n**2) + 1e-12))
    # Scale the noise so that 20*log10(rms / noise_rms) equals snr_db
    target_n_rms = rms / (10**(snr_db/20))
    y_noisy = np.clip(y + n * target_n_rms, -1.0, 1.0)
    sf.write(out_wav, y_noisy, sr)

def play(title, path):
    print(f"▶ {title}: {path}")
    display(Audio(path, rate=sr))

def clean_txt(s: str) -> str:
    # Lowercase, replace punctuation with spaces, and collapse repeated whitespace
    return " ".join("".join(ch.lower() if ch.isalnum() or ch.isspace() else " " for ch in s).split())

@dataclass
class Sample:
    text: str
    clean_wav: str
    noisy_wav: str
    enhanced_wav: str

We define small utilities that power our pipeline from end to end. We synthesize speech with gTTS and convert it to WAV, inject controlled Gaussian noise at a target SNR, and add helpers to preview audio and normalize text. We also create a Sample dataclass so we can neatly track each utterance's clean, noisy, and enhanced paths.
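The noise scaling in add_noise follows from the SNR definition, SNR_dB = 20·log10(rms_signal / rms_noise). As a quick sanity check, the sketch below applies the same scaling to a synthetic sine tone and measures the SNR that results. The tone is only a stand-in for speech so the check runs without gTTS, and the helper names mix_at_snr and measured_snr_db are hypothetical, not part of the tutorial code.

```python
import numpy as np

def mix_at_snr(y, snr_db, rng):
    """Add white Gaussian noise scaled to the requested SNR (no clipping applied)."""
    rms = np.sqrt(np.mean(y ** 2) + 1e-12)
    n = rng.normal(0.0, 1.0, len(y))
    n = n / np.sqrt(np.mean(n ** 2) + 1e-12)      # normalize noise to unit RMS
    target_n_rms = rms / (10 ** (snr_db / 20))    # same scaling as add_noise
    return y + n * target_n_rms

def measured_snr_db(clean, noisy):
    """SNR in dB computed from the clean signal and the residual noise."""
    noise = noisy - clean
    return 20 * np.log10(np.sqrt(np.mean(clean ** 2)) / np.sqrt(np.mean(noise ** 2)))

sr = 16000
t = np.arange(sr) / sr
tone = 0.1 * np.sin(2 * np.pi * 220 * t)          # 1 s, 220 Hz stand-in for speech
rng = np.random.default_rng(0)

for target in (0.0, 3.0, 10.0):
    noisy = mix_at_snr(tone, target, rng)
    print(f"target {target:4.1f} dB -> measured {measured_snr_db(tone, noisy):.2f} dB")
```

The tutorial's add_noise also clips the result to [-1, 1]. For loud signals at low SNR, clipping changes the noise that actually lands in the file, so the achieved SNR can differ slightly from the target.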

sentences = [
    "Artificial intelligence is transforming everyday life.",
    "Open source tools enable rapid research and innovation.",
    "SpeechBrain brings flexible speech pipelines to Python."
]

samples: List[Sample] = []
print("🗣️ Synthesizing short utterances with gTTS...")
for i, s in enumerate(sentences, 1):
    cw = str(root/f"clean_{i}.wav")
    nw = str(root/f"noisy_{i}.wav")
    ew = str(root/f"enhanced_{i}.wav")
    tts_to_wav(s, cw)
    # Odd-numbered utterances get 3 dB SNR, even-numbered ones get 0 dB
    add_noise(cw, snr_db=3.0 if i % 2 else 0.0, out_wav=nw)
    samples.append(Sample(text=s, clean_wav=cw, noisy_wav=nw, enhanced_wav=ew))

play("Clean #1", samples[0].clean_wav)
play("Noisy #1", samples[0].noisy_wav)

print("⬇️ Loading pretrained models (this downloads once) ...")
asr = EncoderDecoderASR.from_hparams(
    source="speechbrain/asr-crdnn-rnnlm-librispeech",
    run_opts={"device": device},
    savedir=str(root/"pretrained_asr"),
)
enhancer = SpectralMaskEnhancement.from_hparams(
    source="speechbrain/metricgan-plus-voicebank",
    run_opts={"device": device},
    savedir=str(root/"pretrained_enh"),
)

In this step, we generate three spoken sentences with gTTS, save both clean and noisy versions, and organize them into our Sample objects. We then load SpeechBrain's pre-trained ASR and MetricGAN+ enhancement models, giving us all the components needed to turn noisy audio into a denoised transcription.
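To make the noise schedule explicit: the expression `3.0 if i % 2 else 0.0` relies on `i % 2` being truthy for odd `i`. Because the loop counts from 1, odd-numbered utterances are mixed at 3 dB SNR and even-numbered ones at 0 dB. A minimal sketch follows; the helper snr_for_index is a hypothetical name used only for illustration.

```python
def snr_for_index(i: int) -> float:
    # i % 2 is 1 (truthy) for odd i and 0 (falsy) for even i
    return 3.0 if i % 2 else 0.0

# Utterance indices start at 1, matching enumerate(sentences, 1)
schedule = {i: snr_for_index(i) for i in range(1, 4)}
print(schedule)  # {1: 3.0, 2: 0.0, 3: 3.0}
```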

def enhance_file(in_wav: str, out_wav: str):
    sig = enhancer.enhance_file(in_wav)
    # torchaudio.save expects a (channels, time) tensor
    if sig.dim() == 1: sig = sig.unsqueeze(0)
    torchaudio.save(out_wav, sig.cpu(), sr)

def transcribe(path: str) -> str:
    hyp = asr.transcribe_file(path)
    return clean_txt(hyp)

def eval_pair(ref_text: str, wav_path: str) -> Tuple[str, float]:
    # Normalize the reference the same way as the hypothesis before scoring
    hyp = transcribe(wav_path)
    return hyp, wer(clean_txt(ref_text), hyp)

print("\n🔬 Transcribing noisy vs enhanced (MetricGAN+)...")
rows = []
t0 = time.time()
for smp in samples:
    enhance_file(smp.noisy_wav, smp.enhanced_wav)
    hyp_noisy, wer_noisy = eval_pair(smp.text, smp.noisy_wav)
    hyp_enh, wer_enh = eval_pair(smp.text, smp.enhanced_wav)
    rows.append((smp.text, hyp_noisy, wer_noisy, hyp_enh, wer_enh))
t1 = time.time()

We create helper functions to enhance noisy audio, transcribe speech, and evaluate WER against the reference text. We then run these steps across all our samples, comparing noisy and enhanced versions, and record both transcriptions and error rates along with the processing time.
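For intuition about the number jiwer reports, word error rate is the word-level edit distance between reference and hypothesis (substitutions, deletions, and insertions) divided by the number of reference words. The sketch below is a plain-Python illustration of that definition, not jiwer's implementation. The function name word_error_rate is hypothetical, and the hypothesis string is made up for the example rather than taken from real model output.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the processed ref prefix and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, 1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, 1):
            cost = 0 if r == h else 1
            curr[j] = min(prev[j] + 1,         # deletion (reference word missing)
                          curr[j - 1] + 1,     # insertion (extra hypothesis word)
                          prev[j - 1] + cost)  # match or substitution
        prev = curr
    return prev[-1] / len(ref)

ref = "open source tools enable rapid research and innovation"
hyp = "open source tool enable rapid research innovation"  # made-up hypothesis
print(word_error_rate(ref, hyp))  # 0.25: one substitution + one deletion over 8 words
```

Because insertions count as errors, WER can exceed 1.0 when the hypothesis is much longer than the reference.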

def fmt(x): return f"{x:.3f}" if isinstance(x, float) else x

print(f"\n⏱️ Inference time: {t1 - t0:.2f}s on {device.upper()}")
print("\n# ---- Results (Noisy → Enhanced) ----")
for i, (ref, hN, wN, hE, wE) in enumerate(rows, 1):
    print(f"\nUtterance {i}")
    print("Ref:      ", ref)
    print("Noisy ASR:", hN)
    print("WER noisy:", fmt(wN))
    print("Enh ASR:  ", hE)
    print("WER enh:  ", fmt(wE))

print("\n🧵 Batch decoding (looping API):")
batch_files = [s.clean_wav for s in samples] + [s.noisy_wav for s in samples]
bt0 = time.time()
batch_hyps = [transcribe(p) for p in batch_files]
bt1 = time.time()
for p, h in zip(batch_files, batch_hyps):
    print(os.path.basename(p), "->", h[:80] + ("..." if len(h) > 80 else ""))
print(f"⏱️ Batch elapsed: {bt1 - bt0:.2f}s")

play("Enhanced #1 (MetricGAN+)", samples[0].enhanced_wav)

# Average WER over all utterances, before and after enhancement
avg_wn = sum(wN for _,_,wN,_,_ in rows) / len(rows)
avg_we = sum(wE for _,_,_,_,wE in rows) / len(rows)
print("\n📈 Summary:")
print(f"Avg WER (Noisy):    {avg_wn:.3f}")
print(f"Avg WER (Enhanced): {avg_we:.3f}")
print("Tip: Try different SNRs or longer texts, and switch device to GPU if available.")

We summarize our experiment by timing inference, printing per-utterance transcriptions, and contrasting WER before and after enhancement. We also decode multiple files in a loop, listen to an enhanced sample, and report average WERs so we can see what MetricGAN+ contributes to our pipeline.
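One way to condense the two averages into a single figure is the relative WER reduction, (noisy − enhanced) / noisy. The sketch below assumes a list of (wer_noisy, wer_enhanced) pairs. The function name summarize_wer is hypothetical, and the numbers are placeholders chosen for easy arithmetic, not results from running the models.

```python
from statistics import mean

def summarize_wer(pairs):
    """Return (avg noisy WER, avg enhanced WER, relative reduction or None)."""
    avg_noisy = mean(p[0] for p in pairs)
    avg_enh = mean(p[1] for p in pairs)
    # Relative reduction is undefined when the noisy baseline is already 0
    rel = None if avg_noisy == 0 else (avg_noisy - avg_enh) / avg_noisy
    return avg_noisy, avg_enh, rel

# Placeholder values for illustration only (NOT measured results)
placeholder_pairs = [(0.50, 0.25), (0.25, 0.25), (0.75, 0.50)]
avg_noisy, avg_enh, rel = summarize_wer(placeholder_pairs)
print(f"Avg WER noisy={avg_noisy:.3f} enhanced={avg_enh:.3f} relative reduction={rel:.1%}")
```

A negative relative reduction would mean the enhanced audio was transcribed less accurately than the noisy input, which is worth checking for at each SNR.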

In conclusion, we see the value of integrating speech enhancement and ASR into a unified pipeline with SpeechBrain. By generating audio, corrupting it with noise, enhancing it, and finally transcribing it, we gain hands-on insight into how these models affect recognition accuracy in noisy environments. The results highlight the practical benefits of using open-source speech technologies. We end with a working framework that can be easily extended to larger datasets, different enhancement models, or custom ASR tasks.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.