In this tutorial, we build an advanced voice AI agent using Hugging Face's freely available models, and we keep the entire pipeline simple enough to run smoothly on Google Colab. We combine Whisper for speech recognition, FLAN-T5 for natural language reasoning, and Bark for speech synthesis, all wired together with the transformers library. By doing this, we avoid heavy dependencies, API keys, or complicated setups, and we focus on showing how we can turn voice input into meaningful conversation and get back natural-sounding voice responses interactively. Check out the FULL CODES here.

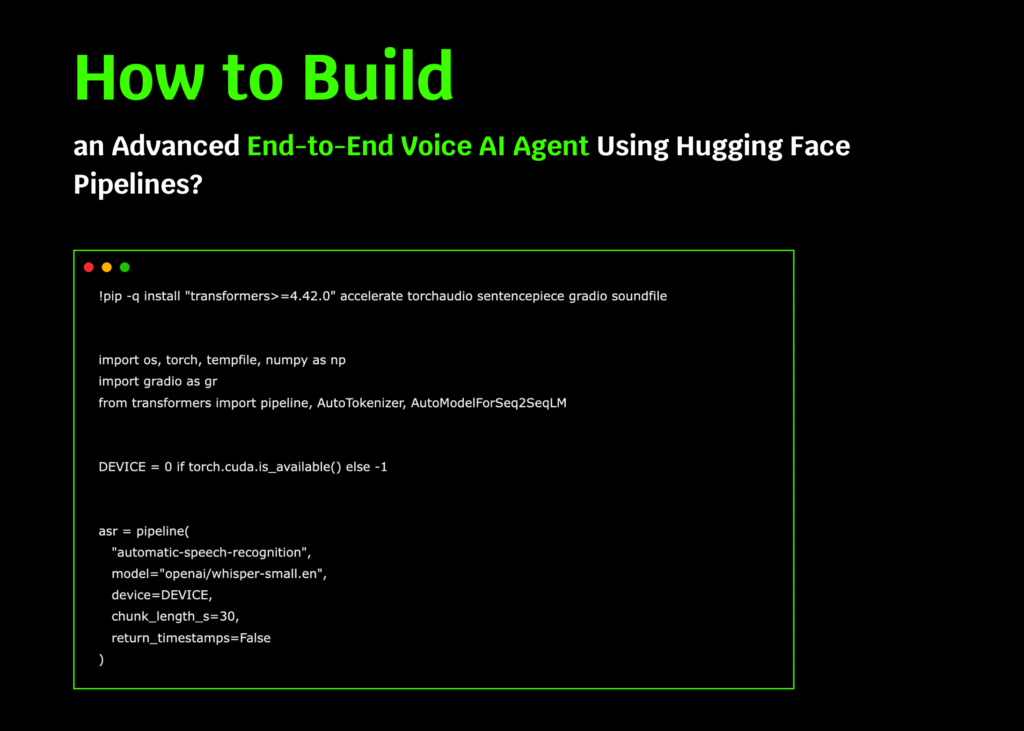

```python
!pip -q install "transformers>=4.42.0" accelerate torchaudio sentencepiece gradio soundfile

import os, torch, tempfile, numpy as np
import gradio as gr
from transformers import pipeline, AutoTokenizer, AutoModelForSeq2SeqLM

# Pipelines take a device index: 0 = first GPU, -1 = CPU
DEVICE = 0 if torch.cuda.is_available() else -1

# Speech-to-text: English-only Whisper (small)
asr = pipeline(
    "automatic-speech-recognition",
    model="openai/whisper-small.en",
    device=DEVICE,
    chunk_length_s=30,
    return_timestamps=False,
)

# Text generation: FLAN-T5 loaded as a seq2seq model (device_map needs accelerate)
LLM_MODEL = "google/flan-t5-base"
tok = AutoTokenizer.from_pretrained(LLM_MODEL)
llm = AutoModelForSeq2SeqLM.from_pretrained(LLM_MODEL, device_map="auto")

# Text-to-speech: small Bark checkpoint, on the same device as Whisper
tts = pipeline("text-to-speech", model="suno/bark-small", device=DEVICE)
```

We install the required libraries and load the three models: Whisper (through an automatic-speech-recognition pipeline) for speech-to-text, FLAN-T5 (through its tokenizer and a seq2seq model) for generating responses, and Bark (through a text-to-speech pipeline) for speech synthesis. We pick the device automatically so that the GPU is used whenever one is available.
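Whisper consumes audio in 30-second windows, so `chunk_length_s=30` tells the ASR pipeline to cut longer recordings into overlapping chunks and stitch the per-chunk transcripts back together. The sketch below only computes where such windows would fall. It is a simplified illustration under our own assumptions: `chunk_windows` is a hypothetical helper (not a transformers function), and a stride of `chunk_s / 6` on each side is our understanding of the pipeline's default `stride_length_s`, not something this tutorial sets.

```python
def chunk_windows(total_s, chunk_s=30.0, stride_s=None):
    """Return (start, end) times in seconds of overlapping fixed-length windows.

    Hypothetical helper illustrating chunked ASR; not the transformers code.
    """
    total_s = float(total_s)
    if stride_s is None:
        # Assumption: symmetric stride of chunk_s / 6 per side (believed default)
        stride_s = chunk_s / 6
    step = chunk_s - 2 * stride_s  # how far each new window advances
    if step <= 0:
        raise ValueError("stride_s is too large for chunk_s")
    windows = []
    start = 0.0
    while True:
        end = min(start + chunk_s, total_s)
        windows.append((start, end))
        if end >= total_s:  # the last window reaches the end of the clip
            break
        start += step
    return windows
```

For a 50-second clip, `chunk_windows(50)` gives two windows, `(0.0, 30.0)` and `(20.0, 50.0)`, which overlap by 10 seconds; that shared context is what lets the merge step line up words at each boundary.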

```python
SYSTEM_PROMPT = (
    "You are a helpful, concise voice assistant. "
    "Prefer direct, structured answers. "
    "If the user asks for steps or code, use short bullet points."
)

def format_dialog(history, user_text):
    """Flatten past (user, assistant) turns plus the new message into one prompt."""
    turns = []
    for u, a in history:
        if u: turns.append(f"User: {u}")
        if a: turns.append(f"Assistant: {a}")
    turns.append(f"User: {user_text}")
    prompt = (
        "Instruction:\n"
        f"{SYSTEM_PROMPT}\n\n"
        "Dialog so far:\n" + "\n".join(turns) + "\n\n"
        "Assistant:"
    )
    return prompt
```

We define a system prompt that keeps our agent concise and structured, and we implement a `format_dialog` function that takes the past conversation history together with the new user input and builds the prompt string from which the model generates the assistant's reply.
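With `truncation=True`, the FLAN-T5 tokenizer cuts prompts to its 512-token `model_max_length`, and by default it truncates from the right, so once the history grows long it is the newest user message and the trailing `Assistant:` cue that get dropped first. A minimal sketch of the opposite policy, discarding the oldest turns until the prompt fits, is below; `trim_history` and its `render`/`measure` parameters are hypothetical, not part of the original code.

```python
def trim_history(history, render, max_len, measure=len):
    """Drop the oldest (user, assistant) turns until render(turns) fits in max_len.

    render:  callable mapping a list of turns to the final prompt string
    measure: callable giving a prompt's size; len() counts characters, while a
             tokenizer-based length is closer to what the model actually sees
    """
    turns = list(history or [])  # copy, so the caller's history is untouched
    while turns and measure(render(turns)) > max_len:
        turns.pop(0)  # oldest turn goes first; the newest message lives in render()
    return turns
```

In the app, calling `trim_history(history, lambda h: format_dialog(h, user_text), 512, measure=lambda s: len(tok(s).input_ids))` at the top of `generate_reply` would keep the prompt within the tokenizer limit, leaving `truncation=True` as a last resort for a single oversized message.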

```python
def transcribe(filepath):
    """Speech-to-text with Whisper."""
    out = asr(filepath)
    text = out["text"].strip()
    return text

def generate_reply(history, user_text, max_new_tokens=256):
    """Generate the assistant's reply with FLAN-T5 using nucleus sampling."""
    prompt = format_dialog(history, user_text)
    inputs = tok(prompt, return_tensors="pt", truncation=True).to(llm.device)
    with torch.no_grad():
        ids = llm.generate(
            **inputs,
            max_new_tokens=max_new_tokens,
            temperature=0.7,
            do_sample=True,
            top_p=0.9,
            repetition_penalty=1.05,
        )
    reply = tok.decode(ids[0], skip_special_tokens=True).strip()
    return reply

def synthesize_speech(text):
    """Text-to-speech with Bark; returns (sample_rate, waveform) for gr.Audio."""
    out = tts(text)
    audio = out["audio"]
    sr = out["sampling_rate"]
    # Bark can return a (1, n_samples) array; squeeze it to a 1-D mono waveform
    audio = np.asarray(audio, dtype=np.float32).squeeze()
    return (sr, audio)
```

We create three core functions for our voice agent: `transcribe` converts recorded audio into text using Whisper, `generate_reply` builds a context-aware response with FLAN-T5, and `synthesize_speech` turns that response back into spoken audio with Bark.
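`generate_reply` samples instead of decoding greedily: `temperature=0.7` sharpens the next-token distribution, and `top_p=0.9` restricts sampling to the smallest set of most likely tokens whose probability mass reaches 90%. The pure-Python sketch below shows that textbook recipe on a toy list of logits. It is not the transformers implementation (which operates on tensors and also applies `repetition_penalty`), and `nucleus_candidates`/`sample_token` are hypothetical names.

```python
import math
import random

def nucleus_candidates(logits, temperature=0.7, top_p=0.9):
    """Return [(token_index, prob), ...] kept by temperature + top-p filtering.

    Probabilities are renormalized over the kept set.
    """
    # 1) Temperature: divide logits before the softmax (T < 1 sharpens)
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]
    # 2) Top-p: take tokens from most to least likely until the mass reaches top_p
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, mass = [], 0.0
    for i in order:
        kept.append(i)
        mass += probs[i]
        if mass >= top_p:
            break
    return [(i, probs[i] / mass) for i in kept]

def sample_token(logits, temperature=0.7, top_p=0.9, rng=random):
    """Draw one token index from the filtered, renormalized distribution."""
    candidates = nucleus_candidates(logits, temperature, top_p)
    indices = [i for i, _ in candidates]
    weights = [p for _, p in candidates]
    return rng.choices(indices, weights=weights, k=1)[0]
```

With four equally likely tokens and `top_p=0.5`, only the first two survive the filter; with one dominant logit, the nucleus collapses to that single token and sampling becomes effectively greedy.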

```python
def clear_history():
    # Clears both the Chatbot display and the stored history state
    return [], []

def voice_to_voice(mic_file, history):
    """Microphone recording -> transcript -> reply -> spoken reply."""
    history = history or []
    if not mic_file:
        return history, None, "Please record something!"
    try:
        user_text = transcribe(mic_file)
    except Exception as e:
        return history, None, f"ASR error: {e}"
    if not user_text:
        return history, None, "Didn't catch that. Try again?"
    try:
        reply = generate_reply(history, user_text)
    except Exception as e:
        return history, None, f"LLM error: {e}"
    try:
        sr, wav = synthesize_speech(reply)
    except Exception as e:
        # Keep the text reply in the history even if speech synthesis fails
        return history + [(user_text, reply)], None, f"TTS error: {e}"
    return history + [(user_text, reply)], (sr, wav), f"User: {user_text}\nAssistant: {reply}"

def text_to_voice(user_text, history):
    """Typed message -> reply -> spoken reply."""
    history = history or []
    user_text = (user_text or "").strip()
    if not user_text:
        return history, None, "Type a message first."
    try:
        reply = generate_reply(history, user_text)
        sr, wav = synthesize_speech(reply)
    except Exception as e:
        return history, None, f"Error: {e}"
    return history + [(user_text, reply)], (sr, wav), f"User: {user_text}\nAssistant: {reply}"

def export_chat(history):
    """Write the conversation to a temporary .txt file and return its path."""
    lines = []
    for u, a in history or []:
        lines += [f"User: {u}", f"Assistant: {a}", ""]
    text = "\n".join(lines).strip() or "No conversation yet."
    with tempfile.NamedTemporaryFile(delete=False, suffix=".txt", mode="w") as f:
        f.write(text)
        path = f.name
    return path
```

We add interactive functions for our agent: `clear_history` resets the conversation, `voice_to_voice` handles speech input and returns a spoken reply, `text_to_voice` processes typed input and speaks back, and `export_chat` saves the entire conversation to a downloadable text file.
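Because these callbacks call the models directly, exercising them means loading Whisper, FLAN-T5, and Bark. One option, sketched here under our own assumptions, is to inject the model functions so the callback contract can be checked with stubs: it returns a `(history, audio, transcript)` triple matching the `[state, audio_out, transcript]` outputs, and leaves the history unchanged on failure. `make_text_to_voice` is a hypothetical factory, not part of the original code.

```python
def make_text_to_voice(generate_reply, synthesize_speech):
    """Build a text_to_voice-style callback from injected model functions."""
    def text_to_voice(user_text, history):
        history = history or []
        user_text = (user_text or "").strip()
        if not user_text:
            return history, None, "Type a message first."
        try:
            reply = generate_reply(history, user_text)
            sr, wav = synthesize_speech(reply)
        except Exception as e:
            # On any failure the history is returned as-is (no new turn added)
            return history, None, f"Error: {e}"
        # Build a new list rather than mutating the caller's history in place
        return history + [(user_text, reply)], (sr, wav), f"User: {user_text}\nAssistant: {reply}"
    return text_to_voice
```

Passing the real functions, `make_text_to_voice(generate_reply, synthesize_speech)`, would reproduce the original callback, while stubs such as `lambda h, t: t.upper()` make it testable in milliseconds.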

```python
with gr.Blocks(title="Advanced Voice AI Agent (HF Pipelines)") as demo:
    gr.Markdown(
        "## 🎙️ Advanced Voice AI Agent (Hugging Face Pipelines Only)\n"
        "- **ASR**: openai/whisper-small.en\n"
        "- **LLM**: google/flan-t5-base\n"
        "- **TTS**: suno/bark-small\n"
        "Speak or type; the agent replies with voice + text."
    )
    with gr.Row():
        with gr.Column(scale=1):
            mic = gr.Audio(sources=["microphone"], type="filepath", label="Record")
            say_btn = gr.Button("🎤 Speak")
            text_in = gr.Textbox(label="Or type instead", placeholder="Ask me anything…")
            text_btn = gr.Button("💬 Send")
            export_btn = gr.Button("⬇️ Export Chat (.txt)")
            reset_btn = gr.Button("♻️ Reset")
        with gr.Column(scale=1):
            audio_out = gr.Audio(label="Assistant Voice", autoplay=True)
            transcript = gr.Textbox(label="Transcript", lines=6)
            chat = gr.Chatbot(height=360)
    state = gr.State([])  # list of (user, assistant) tuples

    def update_chat(history):
        return [(u, a) for u, a in (history or [])]

    # Run the callback, then refresh the Chatbot from the updated state
    say_btn.click(voice_to_voice, [mic, state], [state, audio_out, transcript]).then(
        update_chat, inputs=state, outputs=chat
    )
    text_btn.click(text_to_voice, [text_in, state], [state, audio_out, transcript]).then(
        update_chat, inputs=state, outputs=chat
    )
    reset_btn.click(clear_history, None, [chat, state])
    export_btn.click(export_chat, state, gr.File(label="Download chat.txt"))

demo.launch(debug=False)
```

We build a clean Gradio UI that lets us speak or type and then hear the agent's response. We wire the buttons to our callbacks, maintain the chat state, and display the results in a chatbot, a transcript box, and an audio player, all launched as a single Colab app.
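`update_chat` hands the history to `gr.Chatbot` as (user, assistant) tuples. Recent Gradio releases also accept an OpenAI-style list of role/content dicts via `gr.Chatbot(type="messages")` and, as far as we know, steer users toward that format. If your Gradio version supports it, a converter like the hypothetical `to_messages` below could stand in for `update_chat`; this is a sketch under that assumption, not part of the original app.

```python
def to_messages(history):
    """Convert [(user, assistant), ...] pairs into role/content message dicts."""
    messages = []
    for u, a in history or []:
        if u:
            messages.append({"role": "user", "content": u})
        if a:
            messages.append({"role": "assistant", "content": a})
    return messages
```

The component would then be declared as `gr.Chatbot(type="messages", height=360)`, and the `.then(...)` steps would call `to_messages` instead of `update_chat`; the stored state can stay in tuple form.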

In conclusion, we see how seamlessly Hugging Face pipelines let us create a voice-driven conversational agent that listens, thinks, and responds. We now have a working demo that captures audio, transcribes it, generates intelligent responses, and returns speech output, all inside Colab. With this foundation, we can experiment with larger models, add multilingual support, or even extend the system with custom logic. Still, the core idea remains the same: we can bring together ASR, LLM, and TTS in one simple workflow for an interactive voice AI experience.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.