Retrieval-Augmented Generation (RAG) systems typically depend on dense embedding models that map queries and documents into fixed-dimensional vector spaces. While this approach has become the default for many AI applications, recent research from the Google DeepMind team explains a fundamental architectural limitation that cannot be solved by larger models or better training alone.

What Is the Theoretical Limit of Embedding Dimensions?

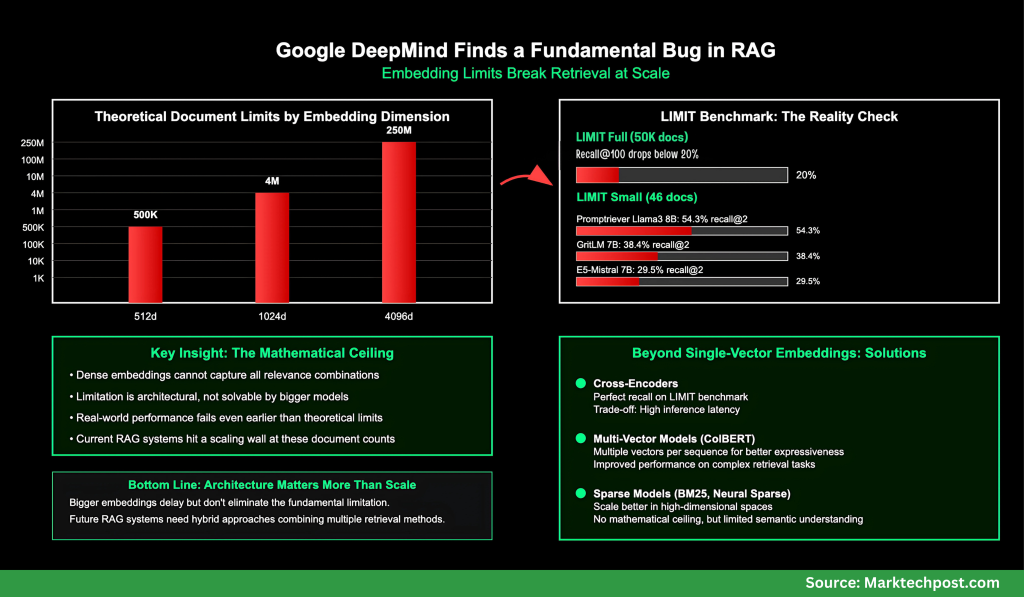

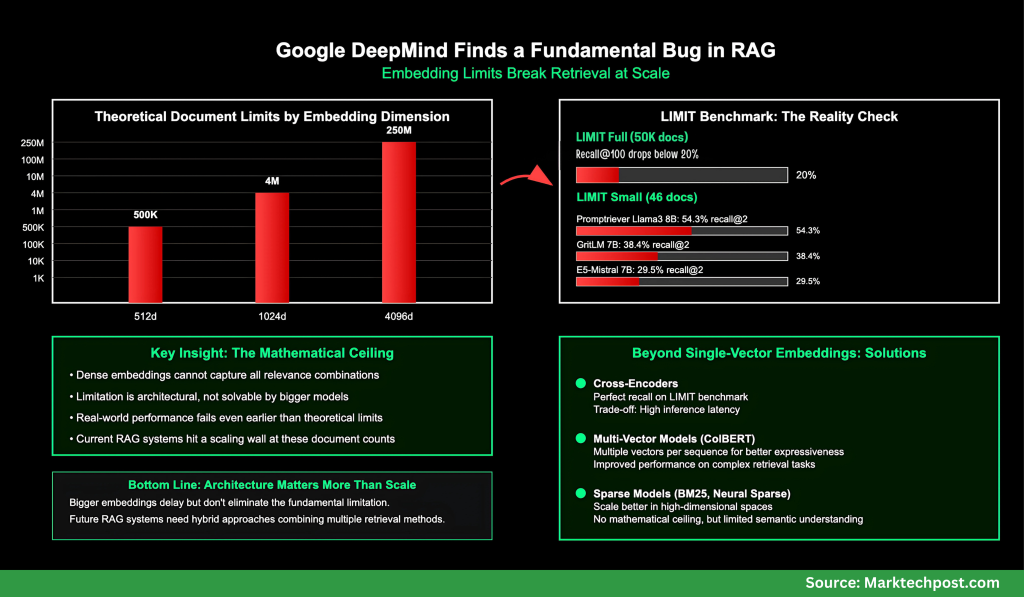

At the core of the issue is the representational capacity of fixed-size embeddings. An embedding of dimension d cannot represent all possible combinations of relevant documents once the database grows beyond a critical size. This follows from results in communication complexity and sign-rank theory.

- For embeddings of size 512, retrieval breaks down around 500K documents.

- For 1024 dimensions, the limit extends to about 4 million documents.

- For 4096 dimensions, the theoretical ceiling is 250 million documents.

These values are best-case estimates derived under free embedding optimization, where vectors are directly optimized against test labels. Real-world language-constrained embeddings fail even earlier.
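To get a feel for why the number of possible relevance combinations can outgrow a fixed dimension, the sketch below counts how many distinct top-k result sets exist for a corpus of a given size. It only illustrates the combinatorial growth and is not the bound derived in the research; the function name `count_top_k_sets` is a hypothetical helper introduced here.

```python
from math import comb


def count_top_k_sets(num_docs: int, k: int) -> int:
    """Return how many distinct k-document subsets a corpus of num_docs contains.

    Each subset is one possible "set of relevant documents" that some query
    could ask a retriever to return as its top-k results.
    """
    return comb(num_docs, k)


if __name__ == "__main__":
    # Corpus sizes chosen for illustration; k=2 keeps the numbers readable.
    for num_docs in (46, 1_000, 50_000):
        print(f"{num_docs:>6} docs -> {count_top_k_sets(num_docs, 2):,} possible top-2 sets")
```

Even with k fixed at 2, the count grows quadratically with corpus size, while the embedding dimension d stays constant.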

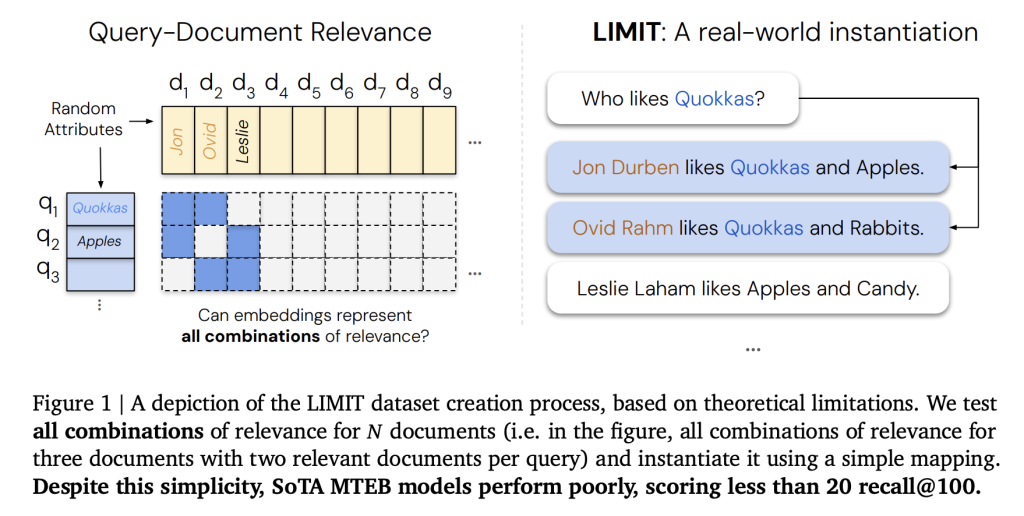

How Does the LIMIT Benchmark Expose This Problem?

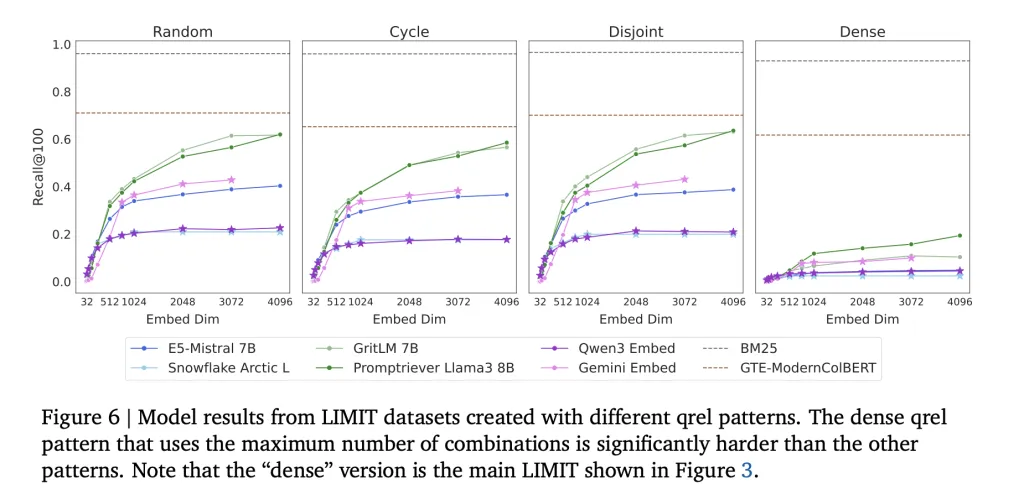

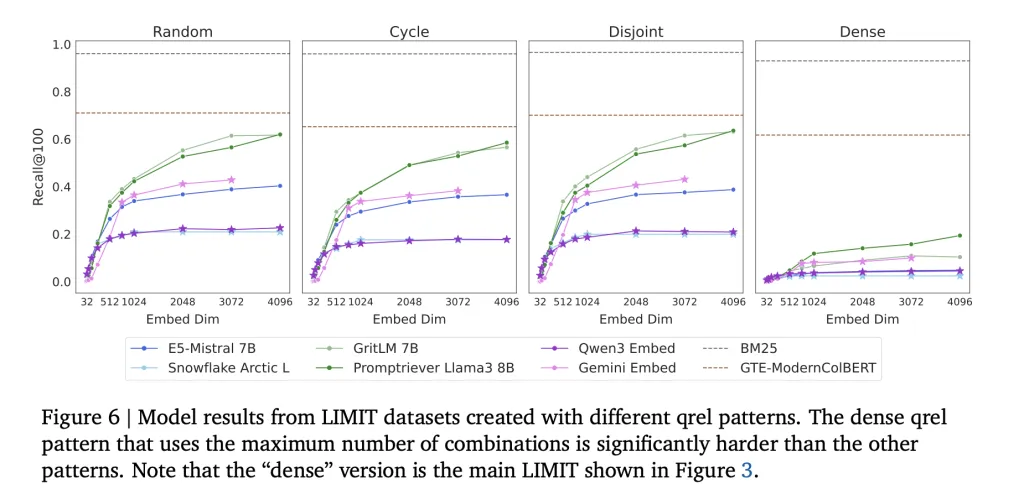

To test this limitation empirically, the Google DeepMind team introduced LIMIT (Limitations of Embeddings in Information Retrieval), a benchmark dataset specifically designed to stress-test embedders. LIMIT has two configurations:

- LIMIT full (50K documents): In this large-scale setup, even strong embedders collapse, with recall@100 often falling below 20%.

- LIMIT small (46 documents): Despite the simplicity of this toy-sized setup, models still fail to solve the task. Performance varies widely but remains far from reliable:

- Promptriever Llama3 8B: 54.3% recall@2 (4096d)

- GritLM 7B: 38.4% recall@2 (4096d)

- E5-Mistral 7B: 29.5% recall@2 (4096d)

- Gemini Embed: 33.7% recall@2 (3072d)

Even with just 46 documents, no embedder reaches full recall, highlighting that the limitation is not dataset size alone but the single-vector embedding architecture itself.
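For readers unfamiliar with the metric, recall@k is the fraction of a query's relevant documents that appear among the top k retrieved results. The following is a minimal sketch of that standard definition; the function name and the document IDs are illustrative assumptions, not taken from the benchmark's code.

```python
def recall_at_k(ranked_doc_ids, relevant_doc_ids, k):
    """Fraction of relevant documents found in the top-k of a ranked list."""
    relevant = set(relevant_doc_ids)
    if not relevant:
        raise ValueError("recall is undefined when there are no relevant documents")
    # Using sets means a duplicated ID in the ranking is only counted once.
    retrieved = set(ranked_doc_ids[:k])
    return len(relevant & retrieved) / len(relevant)


if __name__ == "__main__":
    ranking = ["d3", "d1", "d7", "d2"]   # hypothetical retriever output, best first
    relevant = ["d1", "d2"]              # hypothetical ground-truth labels
    print(recall_at_k(ranking, relevant, k=2))
    print(recall_at_k(ranking, relevant, k=4))
```

With two relevant documents per query, recall@2 reaches 1.0 only when both relevant documents occupy the top two positions.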

In contrast, BM25, a classical sparse lexical model, does not suffer from this ceiling. Sparse models operate in effectively unbounded dimensional spaces, allowing them to capture combinations that dense embeddings cannot.
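As a rough illustration of how a sparse lexical scorer works, here is a minimal BM25 sketch. Each vocabulary term acts as its own dimension, so the representation grows with the vocabulary rather than being fixed in advance. The whitespace tokenizer, the `k1` and `b` defaults, and the example sentences are assumptions made for this sketch; it is not the setup used in the research.

```python
import math
from collections import Counter


def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Score every document against the query with a basic BM25 formula."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # Guard against an all-empty corpus to avoid dividing by zero below.
    avg_len = (sum(len(tokens) for tokens in tokenized) / n_docs) or 1.0

    # Document frequency: in how many documents does each term occur?
    doc_freq = Counter()
    for tokens in tokenized:
        doc_freq.update(set(tokens))

    scores = []
    for tokens in tokenized:
        term_freq = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            if term not in term_freq:
                continue
            df = doc_freq[term]
            idf = math.log((n_docs - df + 0.5) / (df + 0.5) + 1.0)
            tf = term_freq[term]
            length_norm = 1 - b + b * len(tokens) / avg_len
            score += idf * tf * (k1 + 1) / (tf + k1 * length_norm)
        scores.append(score)
    return scores


if __name__ == "__main__":
    corpus = [
        "alice likes quokkas",
        "bob likes apples",
        "carol likes quokkas and apples",
    ]
    for doc, score in zip(corpus, bm25_scores("quokkas apples", corpus)):
        print(f"{score:.3f}  {doc}")
```

A document matching both query terms outscores documents matching only one, because each term contributes independently along its own dimension.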

Why Does This Matter for RAG?

Current RAG implementations often assume that embeddings can scale indefinitely with more data. The Google DeepMind research team explains how this assumption is incorrect: embedding size inherently constrains retrieval capacity. This affects:

- Enterprise search engines handling millions of documents.

- Agentic systems that rely on complex logical queries.

- Instruction-following retrieval tasks, where queries define relevance dynamically.

Even advanced benchmarks like MTEB fail to capture these limitations because they test only a narrow part of the possible query-document combinations.

What Are the Alternatives to Single-Vector Embeddings?

The research team suggested that scalable retrieval will require moving beyond single-vector embeddings:

- Cross-Encoders: Achieve strong recall on LIMIT by directly scoring query-document pairs, but at the cost of high inference latency.

- Multi-Vector Models (e.g., ColBERT): Offer more expressive retrieval by assigning multiple vectors per sequence, improving performance on LIMIT tasks.

- Sparse Models (BM25, TF-IDF, neural sparse retrievers): Scale better in high-dimensional search but lack semantic generalization.

The key insight is that architectural innovation is required, not merely larger embedders.
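To make the difference between single-vector and multi-vector scoring concrete, the sketch below compares a pooled dot product with ColBERT-style late interaction (MaxSim), where each query token vector is matched to its best document token vector and the maxima are summed. The two-dimensional token vectors and the mean pooling used for the single-vector case are toy assumptions chosen for illustration, not real model outputs.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def mean_pool(token_vecs):
    """Collapse a list of token vectors into one vector by averaging."""
    n = len(token_vecs)
    return [sum(component) / n for component in zip(*token_vecs)]


def single_vector_score(query_token_vecs, doc_token_vecs):
    """Single-vector retrieval: one pooled vector per side, one dot product."""
    return dot(mean_pool(query_token_vecs), mean_pool(doc_token_vecs))


def maxsim_score(query_token_vecs, doc_token_vecs):
    """Late interaction: sum over query tokens of the best-matching doc token."""
    return sum(max(dot(q, d) for d in doc_token_vecs) for q in query_token_vecs)


if __name__ == "__main__":
    query = [[1.0, 0.0], [0.0, 1.0]]   # toy query covering two "concepts"
    doc_a = [[1.0, 0.0], [0.0, 1.0]]   # covers both concepts
    doc_b = [[1.0, 0.0], [1.0, 0.0]]   # covers only the first concept

    for name, doc in (("doc_a", doc_a), ("doc_b", doc_b)):
        print(name, single_vector_score(query, doc), maxsim_score(query, doc))
```

In this toy case the pooled single-vector score cannot separate the two documents, while MaxSim ranks the document covering both concepts higher. The trade-off is that multi-vector models store and compare many vectors per document.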

What’s the Key Takeaway?

The research team’s analysis shows that dense embeddings, despite their success, are bound by a mathematical limit: they cannot capture all possible relevance combinations once corpus sizes exceed limits tied to embedding dimensionality. The LIMIT benchmark demonstrates this failure concretely:

- On LIMIT full (50K docs): recall@100 drops below 20%.

- On LIMIT small (46 docs): even the best models max out at ~54% recall@2.

Classical techniques like BM25, or newer architectures such as multi-vector retrievers and cross-encoders, remain essential for building reliable retrieval engines at scale.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.