Google announced a major update to Gemini 3 Deep Think today. The update is built specifically to accelerate modern science, research, and engineering. It appears to be more than just another model release: it represents a pivot toward a ‘reasoning mode’ that uses internal verification to solve problems that previously required human expert intervention.

The updated model is hitting benchmarks that redefine the frontier of intelligence. By focusing on test-time compute (the ability of a model to ‘think’ longer before producing a response), Google is moving beyond simple pattern matching.
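As a rough illustration of what spending more compute at answer time can buy, here is a minimal sketch of a propose-and-verify loop. It is not Google's method, which the article does not describe in detail. The function names, the `budget` parameter, and the toy square-root task are all assumptions chosen for illustration.

```python
from typing import Callable, Iterable, Iterator, Optional, TypeVar

T = TypeVar("T")


def solve_with_verification(
    candidates: Iterable[T],
    verify: Callable[[T], bool],
    budget: int,
) -> Optional[T]:
    """Return the first candidate that passes `verify`.

    At most `budget` candidates are checked; a larger budget stands in
    for letting a model "think" longer before it commits to an answer.
    """
    for attempt, candidate in enumerate(candidates):
        if attempt >= budget:
            break
        if verify(candidate):
            return candidate
    return None


def propose_roots() -> Iterator[int]:
    """Hypothetical stand-in for sampled answers: guesses 0, 1, 2, ..."""
    guess = 0
    while True:
        yield guess
        guess += 1


target = 1369


def is_root(x: int) -> bool:
    # Cheap check of a proposed answer, playing the role of a verifier.
    return x * x == target


# With a small budget the search gives up; with a larger one it succeeds.
small_budget_answer = solve_with_verification(propose_roots(), is_root, budget=10)
large_budget_answer = solve_with_verification(propose_roots(), is_root, budget=100)
```

The point of the toy is only that the same proposer and the same verifier yield a better result when more attempts are allowed, which is the basic trade-off behind test-time compute.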

Redefining AGI with 84.6% on ARC-AGI-2

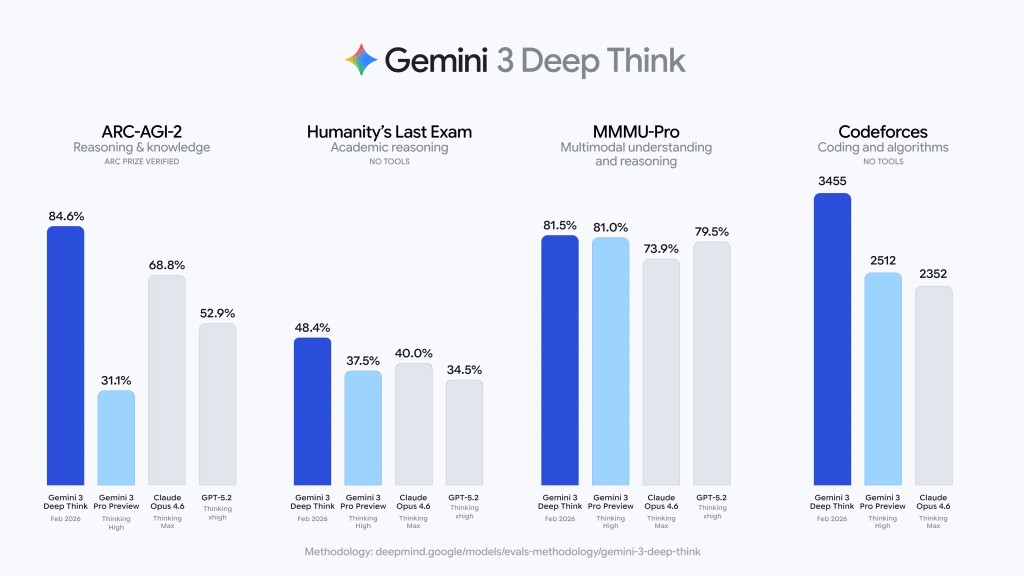

The ARC-AGI benchmark is an ultimate test of intelligence. Unlike traditional benchmarks that test memorization, ARC-AGI measures a model’s ability to learn new skills and generalize to novel tasks it has never seen. The Google team reported that Gemini 3 Deep Think achieved 84.6% on ARC-AGI-2, a result verified by the ARC Prize Foundation.

A score of 84.6% is a massive leap for the industry. To put this in perspective, humans average about 60% on these visual reasoning puzzles, while earlier AI models often struggled to break 20%. This suggests the model is no longer just predicting the most likely next word; it is developing a flexible internal representation of logic. This capability is critical for R&D environments where engineers deal with messy, incomplete, or novel data that does not exist in a training set.

Passing ‘Humanity’s Last Exam’

Google also set a new standard on Humanity’s Last Exam (HLE), scoring 48.4% (without tools). HLE is a benchmark consisting of thousands of questions designed by subject matter experts to be answerable by human experts but nearly impossible for current AI. These questions span specialized academic topics where data is scarce and logic is dense.

Achieving 48.4% without external search tools is a landmark for reasoning models. This performance indicates that Gemini 3 Deep Think can handle high-level conceptual planning. It can work through multi-step logical chains in fields like advanced law, philosophy, and mathematics without drifting into ‘hallucinations.’ It suggests that the model’s internal verification systems are working effectively to prune incorrect reasoning paths.

Competitive Coding: The 3455 Elo Milestone

The most tangible update is in competitive programming. Gemini 3 Deep Think now holds a 3455 Elo rating on Codeforces. In the coding world, a 3455 Elo puts the model in the ‘Legendary Grandmaster’ tier, a level reached by only a tiny fraction of human programmers globally.

This rating means the model excels at algorithmic rigor. It can handle complex data structures, optimize for time complexity, and solve problems that require deep memory management. The model serves as an elite pair programmer. It is particularly useful for ‘agentic coding’, where the AI takes a high-level goal and executes a complex, multi-file solution autonomously. In internal testing, the Google team noted that Gemini 3 Pro showed 35% higher accuracy in resolving software engineering challenges than previous versions.
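To give the 3455 figure some intuition, the classic Elo formula converts a rating gap into an expected score. The sketch below uses that textbook formula; Codeforces uses its own Elo-style system whose details differ, so treat the result as a ballpark only. The 3000 threshold for the ‘Legendary Grandmaster’ tier is an assumption based on the commonly cited cutoff, not a figure from the article.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Classic Elo expected score of player A against player B (0 to 1)."""
    return 1.0 / (1.0 + 10.0 ** ((rating_b - rating_a) / 400.0))


model_rating = 3455  # rating quoted in the article
tier_floor = 3000    # assumption: commonly cited Legendary Grandmaster cutoff

# Expected score of a 3455-rated player against one sitting at the tier floor.
advantage = expected_score(model_rating, tier_floor)
print(f"Expected score vs. a {tier_floor}-rated opponent: {advantage:.2f}")
```

Under this formula, a gap of 400 points corresponds to roughly ten-to-one odds, so a 455-point lead over the tier floor is a large margin even among elite competitors.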

Advancing Science: Physics, Chemistry, and Math

Google’s update is specifically tuned for scientific discovery. Gemini 3 Deep Think achieved gold medal-level results on the written sections of the 2025 International Physics Olympiad and the 2025 International Chemistry Olympiad. It also reached gold medal-level performance on the International Math Olympiad 2025.

Beyond these student-level competitions, the model is performing at a professional research level. It scored 50.5% on the CMT-Benchmark, which tests proficiency in advanced theoretical physics. For researchers and data scientists in biotech or materials science, this means the model can assist in interpreting experimental data or modeling physical systems.

Sensible Engineering and 3D Modeling

The model’s reasoning is not just abstract; it has practical engineering utility. A new capability highlighted by the Google team is the model’s ability to turn a sketch into a 3D-printable object. Deep Think can analyze a 2D drawing, model the complex 3D shapes through code, and generate a final file for a 3D printer.

This reflects the model’s ‘agentic’ nature. It can bridge the gap between a visual idea and a physical product by using code as a tool. For engineers, this reduces the friction between design and prototyping. It also excels at solving complex optimization problems, such as designing recipes for growing thin films in specialized chemical processes.
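For readers unfamiliar with what "modeling a shape through code" looks like, here is a minimal sketch that builds a rectangular box as a triangle mesh and serializes it as ASCII STL, a format widely accepted by 3D-printing slicers. This is not the pipeline Deep Think uses, which the article does not specify. The function names and the box dimensions are assumptions for illustration.

```python
import math
from typing import List, Tuple

Vec = Tuple[float, float, float]
Triangle = Tuple[Vec, Vec, Vec]


def box_triangles(w: float, d: float, h: float) -> List[Triangle]:
    """Return the 12 outward-facing triangles of a w x d x h box at the origin."""
    v = [
        (0, 0, 0), (w, 0, 0), (w, d, 0), (0, d, 0),  # bottom corners
        (0, 0, h), (w, 0, h), (w, d, h), (0, d, h),  # top corners
    ]
    # Vertex order is counter-clockwise when viewed from outside the box.
    faces = [
        (0, 2, 1), (0, 3, 2),  # bottom (-z)
        (4, 5, 6), (4, 6, 7),  # top (+z)
        (0, 1, 5), (0, 5, 4),  # front (-y)
        (3, 7, 6), (3, 6, 2),  # back (+y)
        (0, 4, 7), (0, 7, 3),  # left (-x)
        (1, 2, 6), (1, 6, 5),  # right (+x)
    ]
    return [(v[a], v[b], v[c]) for a, b, c in faces]


def unit_normal(tri: Triangle) -> Vec:
    """Unit normal of a triangle from the cross product of two edges."""
    (ax, ay, az), (bx, by, bz), (cx, cy, cz) = tri
    ux, uy, uz = bx - ax, by - ay, bz - az
    vx, vy, vz = cx - ax, cy - ay, cz - az
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    return (nx / length, ny / length, nz / length)


def to_ascii_stl(triangles: List[Triangle], name: str = "box") -> str:
    """Serialize triangles as an ASCII STL document."""
    lines = [f"solid {name}"]
    for tri in triangles:
        nx, ny, nz = unit_normal(tri)
        lines.append(f"  facet normal {nx:.6f} {ny:.6f} {nz:.6f}")
        lines.append("    outer loop")
        for x, y, z in tri:
            lines.append(f"      vertex {x:.6f} {y:.6f} {z:.6f}")
        lines.append("    endloop")
        lines.append("  endfacet")
    lines.append(f"endsolid {name}")
    return "\n".join(lines) + "\n"


# Hypothetical dimensions in millimetres, as if read off a sketch.
mesh = box_triangles(20.0, 10.0, 5.0)
stl_text = to_ascii_stl(mesh)
```

Writing `stl_text` to a file with a `.stl` extension gives something a slicer can open. A real sketch-to-print workflow would add the hard part, which is inferring the geometry from the drawing in the first place.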

Key Takeaways

- Breakthrough Abstract Reasoning: The model achieved 84.6% on ARC-AGI-2 (verified by the ARC Prize Foundation), showing that it can learn novel tasks and generalize logic rather than relying on memorized training data.

- Elite Coding Performance: With a 3455 Elo rating on Codeforces, Gemini 3 Deep Think performs at the ‘Legendary Grandmaster’ level, outperforming the vast majority of human competitive programmers in algorithmic complexity and system architecture.

- New Standard for Expert Logic: It scored 48.4% on Humanity’s Last Exam (without tools), demonstrating the ability to resolve high-level, multi-step logical chains that were previously considered ‘too human’ for AI to solve.

- Scientific Olympiad Success: The model achieved gold medal-level results on the written sections of the 2025 International Physics and Chemistry Olympiads, showcasing its capacity for professional-grade research and complex physical modeling.

- Scaled Inference-Time Compute: Unlike traditional LLMs, this ‘Deep Think’ mode uses test-time compute to internally verify and self-correct its logic before answering, significantly reducing technical hallucinations.


Michal Sutter is a data science professional with a Master of Science in Data Science from the University of Padova. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels at transforming complex datasets into actionable insights.