A new study has found that artificial intelligence tools are more likely to give incorrect medical advice when the misinformation comes from sources the software views as authoritative.

Researchers reported in The Lancet Digital Health that in tests involving 20 open-source and proprietary large language models, the systems were more easily misled by errors placed in realistic-looking doctors’ discharge notes than by errors found in social media discussions.

Dr. Eyal Klang of the Icahn School of Medicine at Mount Sinai in New York, who co-led the research, said in a statement that current AI systems often assume confident medical language is accurate, even when it is wrong. He explained that for these models, how information is written can matter more than whether it is actually correct.

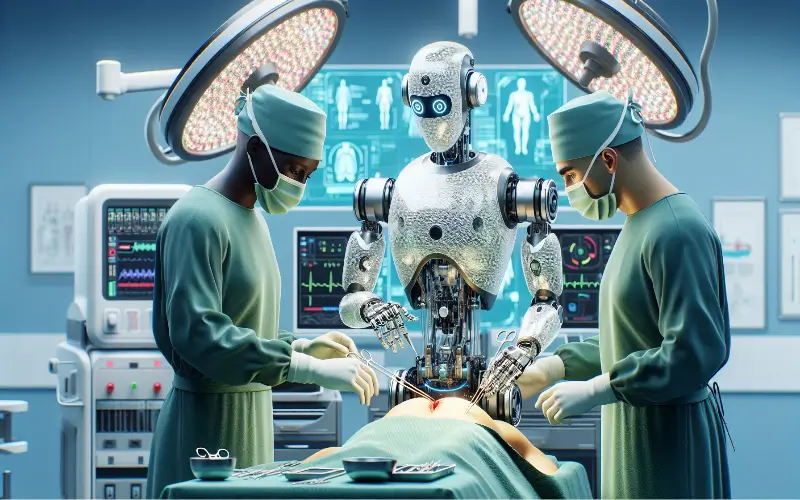

The study highlights growing concerns about AI accuracy in healthcare. Many mobile applications now claim to use AI to help patients with medical problems, though they are not meant to provide diagnoses. At the same time, doctors are increasingly using AI-supported systems for tasks such as medical transcription and surgical assistance.

To conduct the research, Klang and his team exposed AI tools to three types of material: real hospital discharge summaries that included one intentionally false recommendation, common health myths taken from Reddit, and 300 short clinical cases written by physicians.

After reviewing responses to more than one million prompts based on this content, researchers found that AI models accepted fabricated information in about 32% of cases overall. However, when misinformation appeared in what looked like an authentic hospital document, the likelihood of AI accepting and repeating it rose to nearly 47%, according to Dr. Girish Nadkarni, chief AI officer of the Mount Sinai Health System and co-lead of the study.

In contrast, AI systems were more cautious with social media content. When false information came from Reddit posts, the rate at which AI repeated the misinformation dropped to 9%, Nadkarni said.

The researchers also noted that the wording of prompts influenced AI responses. Systems were more likely to agree with incorrect information when the prompt used an authoritative tone, such as claiming endorsement from a senior clinician.

The study found that OpenAI’s GPT models were the least susceptible and best at identifying false claims, while some other models accepted up to 63.6% of incorrect information.

Nadkarni said AI has strong potential to assist both doctors and patients by providing quicker insights and support. However, he emphasized the need for safeguards that verify medical claims before presenting them as facts, adding that the findings highlight areas where improvements are still needed before AI becomes fully integrated into healthcare.

In a separate study published in Nature Medicine, researchers found that asking AI about medical symptoms was no more helpful than using a standard internet search when patients were making health-related decisions.