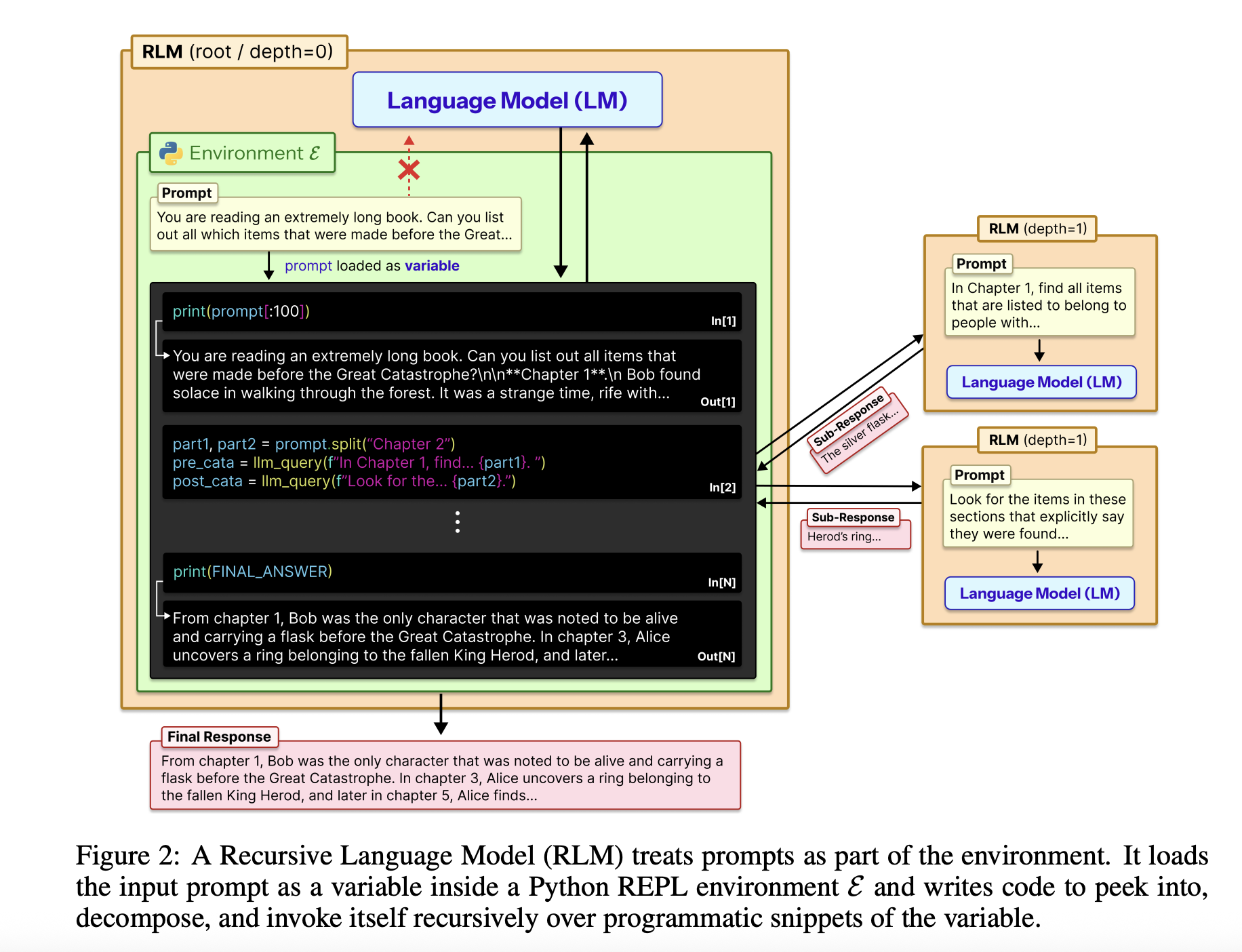

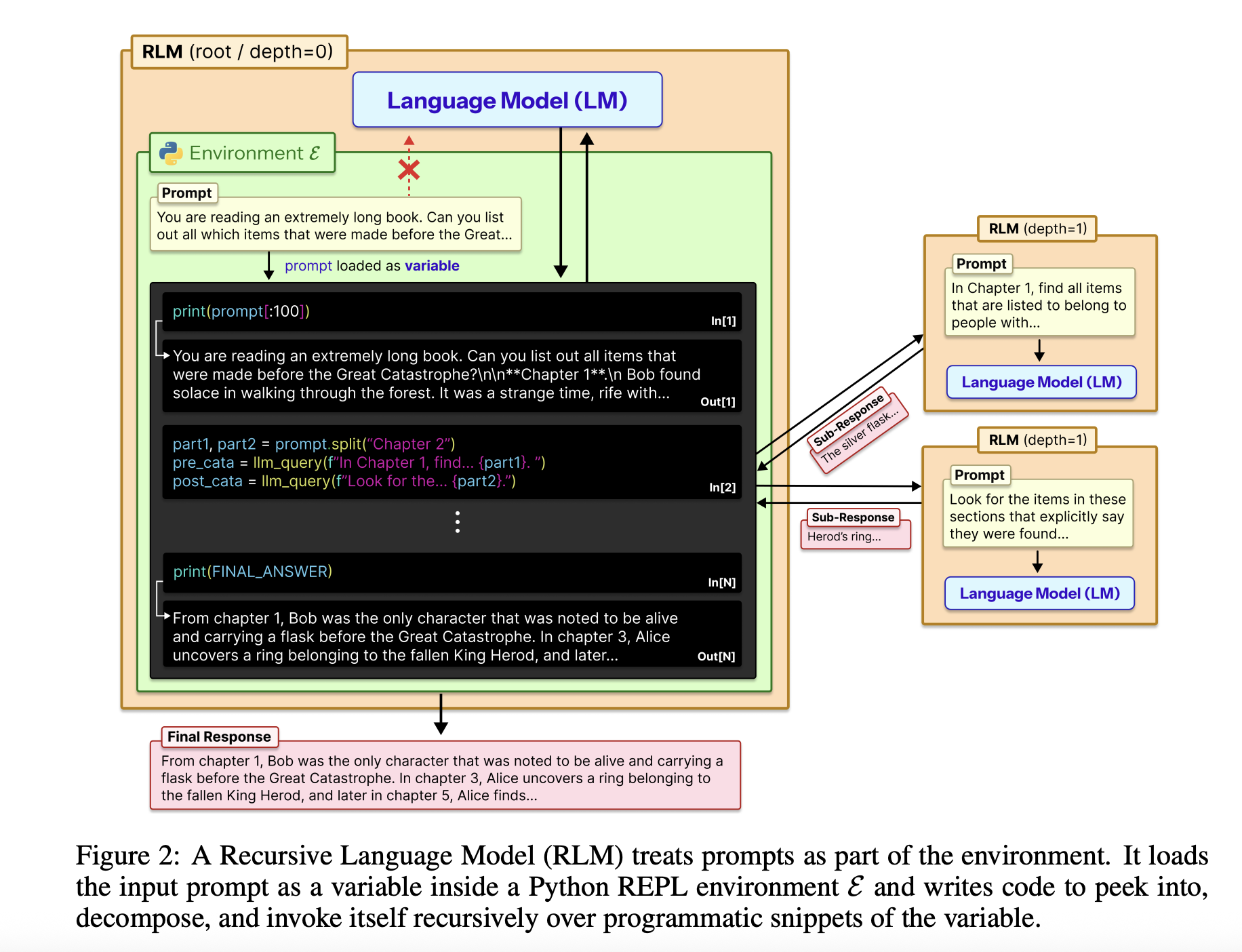

Recursive Language Models purpose to interrupt the standard commerce off between context size, accuracy and value in massive language fashions. As an alternative of forcing a mannequin to learn a large immediate in a single go, RLMs deal with the immediate as an exterior surroundings and let the mannequin determine examine it with code, then recursively name itself on smaller items.

The Fundamentals

The complete enter is loaded right into a Python REPL as a single string variable. The foundation mannequin, for instance GPT-5, by no means sees that string instantly in its context. As an alternative, it receives a system immediate that explains learn slices of the variable, write helper capabilities, spawn sub LLM calls, and mix outcomes. The mannequin returns a ultimate textual content reply, so the exterior interface stays similar to an ordinary chat completion endpoint.

The RLM design makes use of the REPL as a management airplane for lengthy context. The surroundings, normally written in Python, exposes instruments resembling string slicing, regex search and helper capabilities like llm_query that decision a smaller mannequin occasion, for instance GPT-5-mini. The foundation mannequin writes code that calls these helpers to scan, partition and summarize the exterior context variable. The code can retailer intermediate ends in variables and construct up the ultimate reply step-by-step. This construction makes the immediate measurement unbiased from the mannequin context window and turns lengthy context dealing with right into a program synthesis downside.

The place it stands in Analysis?

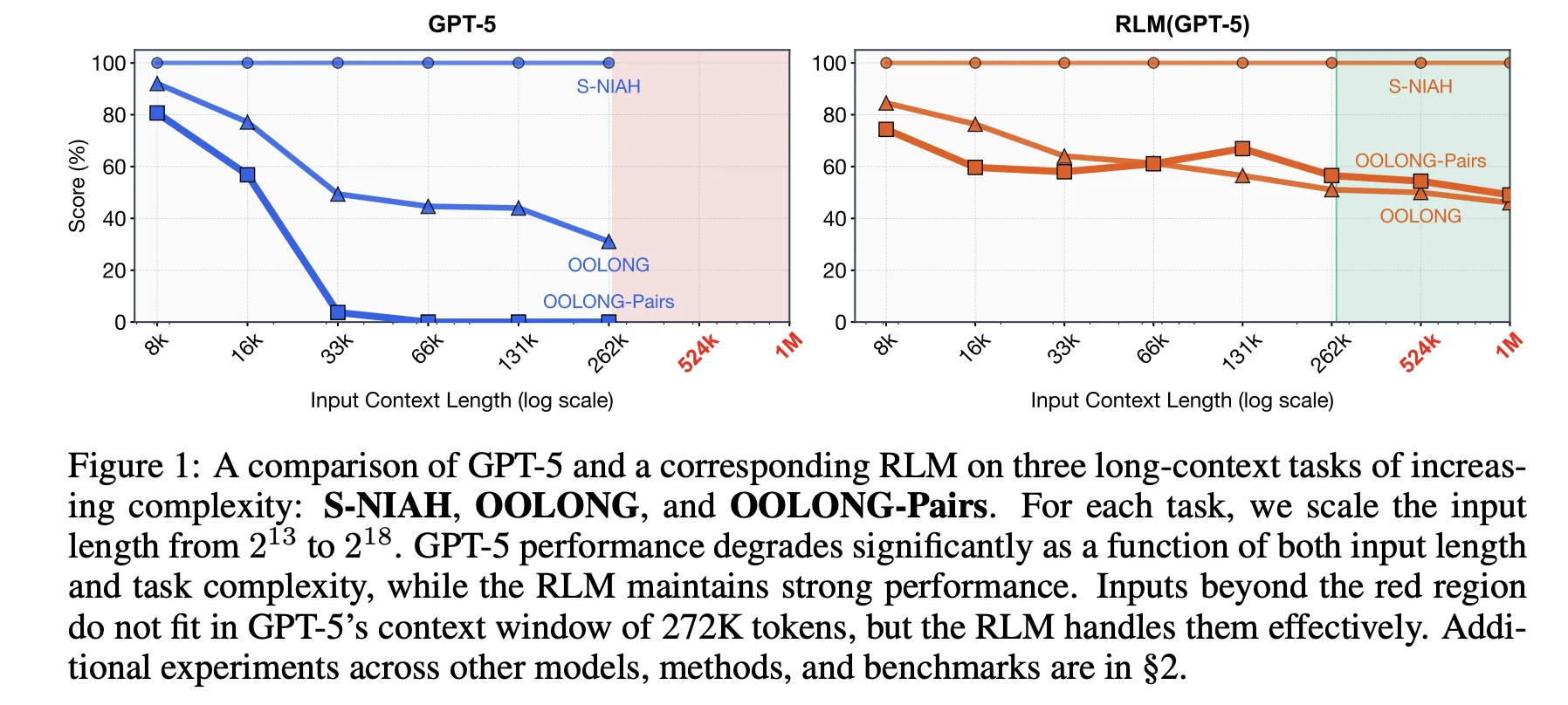

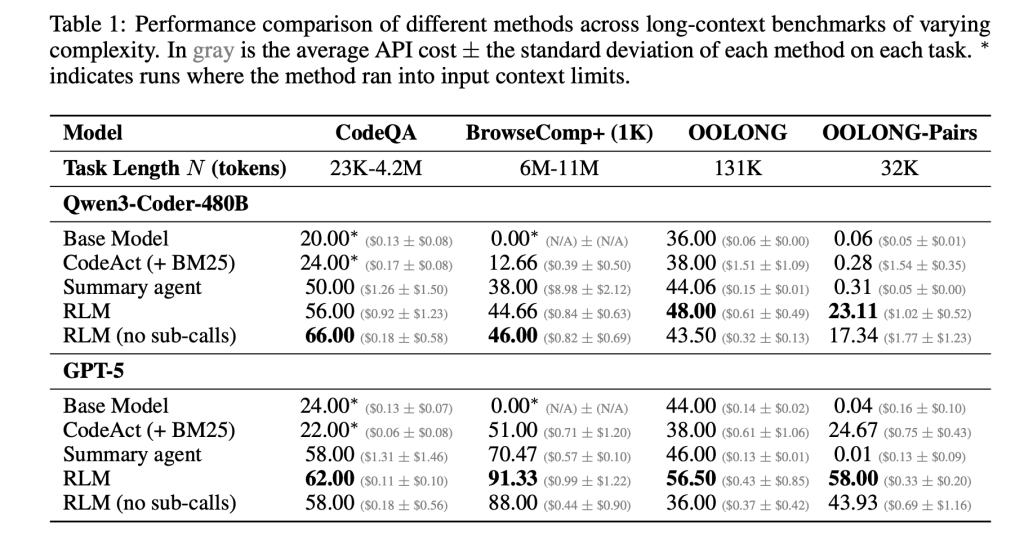

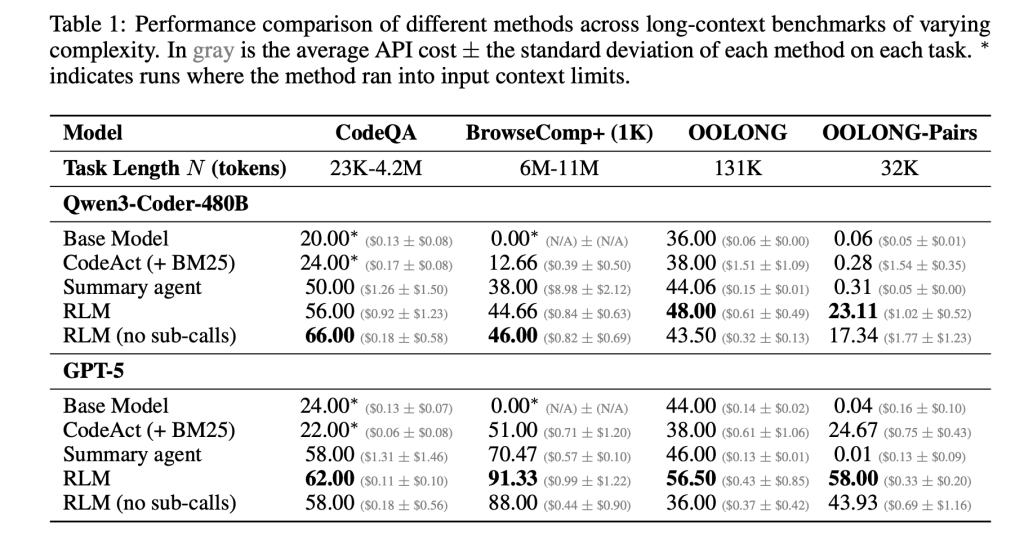

The research paper evaluates this concept on 4 lengthy context benchmarks with completely different computational construction. S-NIAH is a continuing complexity needle in a haystack process. BrowseComp-Plus is a multi hop internet fashion query answering benchmark over as much as 1,000 paperwork. OOLONG is a linear complexity lengthy context reasoning process the place the mannequin should rework many entries after which mixture them. OOLONG Pairs will increase the problem additional with quadratic pairwise aggregation over the enter. These duties stress each context size and reasoning depth, not solely retrieval.

On these benchmarks, RLMs give massive accuracy beneficial properties over direct LLM calls and customary lengthy context brokers. For GPT-5 on CodeQA, an extended doc query answering setup, the bottom mannequin reaches 24.00 accuracy, a summarization agent reaches 41.33, whereas RLM reaches 62.00 and the RLM with out recursion reaches 66.00. For Qwen3-Coder-480B-A35B, the bottom mannequin scores 20.00, a CodeAct retrieval agent 52.00, and the RLM 56.00 with a REPL solely variant at 44.66.

The beneficial properties are largest on the toughest setting, OOLONG Pairs. For GPT-5, the direct mannequin is sort of unusable with F1 equal to 0.04. Summarization and CodeAct brokers sit close to 0.01 and 24.67. The complete RLM reaches 58.00 F1 and the non recursive REPL variant nonetheless achieves 43.93. For Qwen3-Coder, the bottom mannequin stays under 0.10 F1, whereas the complete RLM reaches 23.11 and the REPL solely model 17.34. These numbers present that each the REPL and recursive sub calls are vital on dense quadratic duties.

BrowseComp-Plus highlights efficient context extension. The corpus ranges from about 6M to 11M tokens, which is 2 orders of magnitude past the 272k token context window of GPT-5. RLM with GPT 5 maintains sturdy efficiency even when given 1,000 paperwork within the surroundings variable, whereas commonplace GPT-5 baselines degrade as doc rely grows. On this benchmark, RLM GPT 5 achieves round 91.33 accuracy with a median value of 0.99 USD per question, whereas a hypothetical mannequin that reads the complete context instantly would value between $1.50 and $2.75 at present pricing.

The research paper additionally analyzes the trajectories of RLM runs. A number of habits patterns emerge. The mannequin typically begins with a peek step the place it inspects the primary few thousand characters of the context. It then makes use of grep fashion filtering with regex or key phrase search to slim down related traces. For extra complicated queries, it partitions the context into chunks and calls recursive LMs on every chunk to carry out labeling or extraction, adopted by programmatic aggregation. On lengthy output duties, the RLM shops partial outputs in variables and stitches them collectively, which bypasses output size limits of the bottom mannequin.

The brand new take from Prime Mind

Prime Mind group has turned this idea right into a concrete surroundings, RLMEnv, built-in of their verifiers stack and Environments Hub. Of their design, the principle RLM has solely a Python REPL, whereas sub LLMs obtain the heavy instruments resembling internet search or file entry. The REPL exposes an llm_batch operate so the foundation mannequin can fan out many sub queries in parallel, and an reply variable the place the ultimate answer should be written and flagged as prepared. This isolates token heavy software outputs from the principle context and lets the RLM delegate costly operations to sub fashions.

Prime Intellect evaluates this implementation on 4 environments. DeepDive assessments internet analysis with search and open instruments and really verbose pages. Math python exposes a Python REPL for tough competitors fashion math issues. Oolong reuses the lengthy context benchmark inside RLMEnv. Verbatim copy focuses on actual replica of complicated strings throughout content material varieties resembling JSON, CSV and blended codes. Throughout these environments, GPT-5-mini and the INTELLECT-3-MoE mannequin each achieve from the RLM scaffold in success fee and in robustness to very lengthy contexts, particularly when software output would in any other case swamp the mannequin context

The analysis paper’s creator group and Prime Mind group each stress that present implementations will not be totally optimized. RLM calls are synchronous, recursion depth is restricted and value distributions have heavy tails attributable to very lengthy trajectories. The actual alternative is to mix RLM scaffolding with devoted reinforcement studying in order that fashions study higher chunking, recursion and power utilization insurance policies over time. If that occurs, RLMs present a framework the place enhancements in base fashions and in methods design convert instantly into extra succesful lengthy horizon brokers that may devour 10M plus token environments with out context rot.

Key Takeaways

Listed here are 5 concise, technical takeaways you may plug underneath the article.

- RLMs reframe lengthy context as an surroundings variable: Recursive Language Fashions deal with your entire immediate as an exterior string in a Python fashion REPL, which the LLM inspects and transforms by way of code, as a substitute of ingesting all tokens instantly into the Transformer context.

- Inference time recursion extends context to 10M plus tokens: RLMs let a root mannequin recursively name sub LLMs on chosen snippets of the context, which permits efficient processing of prompts as much as about 2 orders of magnitude longer than the bottom context window, reaching 10M plus tokens on BrowseComp-Plus fashion workloads.

- RLMs outperform widespread lengthy context scaffolds on arduous benchmarks: Throughout S-NIAH, BrowseComp-Plus, OOLONG and OOLONG Pairs, RLM variants of GPT-5 and Qwen3-Coder enhance accuracy and F1 over direct mannequin calls, retrieval brokers resembling CodeAct, and summarization brokers, whereas protecting per question value comparable or decrease.

- REPL solely variants already assist, recursion is vital for quadratic duties: An ablation that solely exposes the REPL with out recursive sub calls nonetheless boosts efficiency on some duties, which exhibits the worth of offloading context into the surroundings, however full RLMs are required to realize massive beneficial properties on info dense settings resembling OOLONG Pairs.

- Prime Mind operationalizes RLMs by way of RLMEnv and INTELLECT 3: Prime Mind group implements the RLM paradigm as RLMEnv, the place the foundation LM controls a sandboxed Python REPL, calls instruments through sub LMs and writes the ultimate consequence to an

replyvariable, and reviews constant beneficial properties on DeepDive, math python, Oolong and verbatim copy environments with fashions resembling INTELLECT-3.

Try the Paper and Technical details. Additionally, be happy to comply with us on Twitter and don’t neglect to affix our 100k+ ML SubReddit and Subscribe to our Newsletter. Wait! are you on telegram? now you can join us on telegram as well.

Asif Razzaq is the CEO of Marktechpost Media Inc.. As a visionary entrepreneur and engineer, Asif is dedicated to harnessing the potential of Synthetic Intelligence for social good. His most up-to-date endeavor is the launch of an Synthetic Intelligence Media Platform, Marktechpost, which stands out for its in-depth protection of machine studying and deep studying information that’s each technically sound and simply comprehensible by a large viewers. The platform boasts of over 2 million month-to-month views, illustrating its reputation amongst audiences.